Overview

Introduction to database optimization

Database optimization techniques improve the performance and efficiency of database systems: they speed up query execution, reduce response times, and make better use of CPU, memory, and storage. In this article, we compare how three popular database management systems, MySQL, SQL Server, and PostgreSQL, approach database optimization, looking at the strengths and weaknesses of each and the key features and optimizations they offer. By the end, you should have a clear overview of the main optimization techniques these systems use, which can help you choose the right database management system for your organization.

Importance of database optimization

Database optimization affects the speed, reliability, and scalability of every application that depends on the database. A well-optimized database lets an organization serve more users on the same hardware, lower infrastructure costs, and deliver a more responsive user experience. Because MySQL, SQL Server, and PostgreSQL expose different optimization tools, understanding those differences is part of deciding which system to use. This article compares the optimization techniques of these three systems and highlights their strengths and weaknesses.

Key factors to consider in database optimization

Database optimization is a crucial consideration when working with any database management system. When comparing MySQL, SQL Server, and PostgreSQL, several key factors come into play: indexing, query optimization, caching, and hardware. Indexing improves query performance by creating indexes on the columns that queries frequently filter, join, or sort on. Query optimization involves analyzing and rewriting queries so that they execute efficiently. Caching reduces the load on the database by keeping frequently accessed data in memory. Hardware considerations involve sizing CPU, memory, and storage for the expected workload. By weighing these factors, developers and administrators can make informed decisions when optimizing their databases.

MySQL Optimization Techniques

Indexing strategies in MySQL

Indexing strategies in MySQL play a crucial role in optimizing database performance. By carefully designing effective indexes, developers can significantly improve query execution time and overall system efficiency. One important feature is the ability to create indexes on multiple columns (composite indexes), which MySQL can use for queries that filter on the leftmost column of the index or on a leftmost prefix of its columns. MySQL also offers several index types: B-tree indexes are the default for InnoDB, while hash indexes are available to the MEMORY storage engine (InnoDB maintains an internal adaptive hash index automatically but does not let you declare hash indexes yourself). It is also worth considering the impact of indexing on write operations, since every additional index must be updated on each insert and update, so excessive indexing slows writes down. Overall, understanding and applying appropriate indexing strategies is essential for good MySQL performance.
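
To make the leftmost-prefix behaviour of composite indexes concrete, here is a small sketch. It uses SQLite through Python's built-in sqlite3 module purely as a stand-in, because it runs without a server; SQLite's B-tree indexes follow the same leftmost-prefix rule as InnoDB's, but MySQL's own EXPLAIN output looks different. The orders table and the idx_orders_customer_date index are hypothetical.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str) -> str:
    """Return the human-readable 'detail' text of SQLite's EXPLAIN QUERY PLAN."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    # The last column of each plan row holds the readable description.
    return " | ".join(str(row[-1]) for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, "
    "order_date TEXT, total REAL)"
)
# Hypothetical composite index; customer_id is its leftmost column.
conn.execute(
    "CREATE INDEX idx_orders_customer_date ON orders (customer_id, order_date)"
)

plan_both = query_plan(
    conn,
    "SELECT * FROM orders WHERE customer_id = 42 AND order_date >= '2024-01-01'",
)
plan_left = query_plan(conn, "SELECT * FROM orders WHERE customer_id = 42")
# order_date alone is not a leftmost prefix, so the index cannot drive this search.
plan_right = query_plan(conn, "SELECT * FROM orders WHERE order_date >= '2024-01-01'")

print("both columns :", plan_both)
print("leftmost only:", plan_left)
print("second only  :", plan_right)
```

The first two plans search the index, while the third falls back to a full table scan. In MySQL you would check the same thing with EXPLAIN. MySQL 8.0.13 and later can sometimes use a skip scan on a composite index whose leftmost column is not filtered, but only under restrictive conditions, so placing the most frequently filtered column first remains the safer design.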

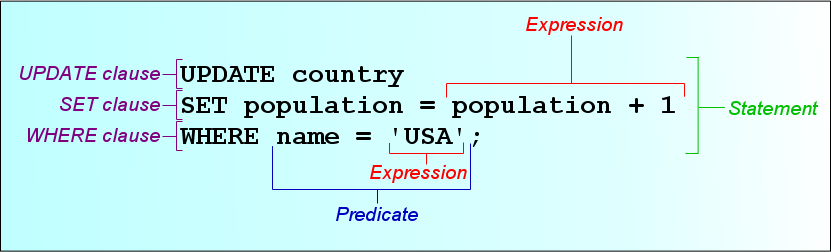

Query optimization in MySQL

Query optimization is a crucial aspect of database management systems. In MySQL, one often-overlooked technique is keeping the server patched and up to date. Database patches are updates to the database software that fix bugs, improve functionality, or enhance performance, and they regularly include optimizer improvements: for example, hash join support arrived in MySQL 8.0.18. By applying the appropriate patches, and then confirming with EXPLAIN that important queries still get the expected execution plans, database administrators can make sure their MySQL servers benefit from these improvements.
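
A practical way to act on this is to check the server version before relying on a release-specific optimizer feature. The sketch below assumes hash joins (added in MySQL 8.0.18) as the feature of interest; the helper names are hypothetical, and the version string would come from running SELECT VERSION() on the server.

```python
import re
from typing import Tuple

# Example of an optimizer feature tied to a release: hash joins arrived in MySQL 8.0.18.
HASH_JOIN_MIN_VERSION = (8, 0, 18)


def parse_mysql_version(version: str) -> Tuple[int, int, int]:
    """Extract (major, minor, patch) from a string like '8.0.35-0ubuntu0.22.04.1'."""
    match = re.match(r"(\d+)\.(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized MySQL version string: {version!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return (major, minor, patch)


def supports_hash_join(version: str) -> bool:
    """True if the server version is at least the release that added hash joins."""
    return parse_mysql_version(version) >= HASH_JOIN_MIN_VERSION


# The version string would normally come from running SELECT VERSION(); on the server.
for v in ["5.7.44", "8.0.17", "8.0.18", "8.0.35-0ubuntu0.22.04.1"]:
    print(v, "->", supports_hash_join(v))
```

Comparing tuples keeps the check correct across minor and patch boundaries (for example, 8.0.9 versus 8.0.18), which a plain string comparison would get wrong. MariaDB reports versions on a different scheme, so this check applies to MySQL only.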

Caching techniques in MySQL

Caching techniques in MySQL play a crucial role in optimizing database performance. By keeping frequently accessed data in memory, MySQL reduces the need for disk I/O, resulting in faster query execution. Older versions offered a query cache, which stored the result sets of SELECT statements so that identical queries could be answered without being executed again; however, the query cache was deprecated in MySQL 5.7.20 and removed in MySQL 8.0 because of scalability problems. The main cache in modern MySQL is the InnoDB buffer pool, which holds table and index pages in memory so that frequently read data is served from RAM instead of disk; its size is controlled by the innodb_buffer_pool_size setting. When result caching is still needed, it is now typically handled in the application layer or by an external cache.
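
Because the query cache is gone in MySQL 8.0, result caching usually moves into the application. The following is a minimal read-through cache with a time-to-live, written as a sketch: ReadThroughCache and load_product are hypothetical names, and the loader stands in for a real query against MySQL.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class ReadThroughCache:
    """Minimal application-side cache with a time-to-live (hypothetical helper).

    Not thread-safe and unbounded in size; a production cache would add locking
    and an eviction policy, or use an external cache server instead.
    """

    def __init__(
        self,
        loader: Callable[[Hashable], Any],
        ttl_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._loader = loader  # e.g. a function that runs a SELECT against MySQL
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[float, Any]] = {}

    def get(self, key: Hashable) -> Any:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # fresh hit: no database round trip
        value = self._loader(key)  # miss or expired entry: query the database
        self._entries[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key: Hashable) -> None:
        """Drop a cached entry, e.g. right after the underlying row is updated."""
        self._entries.pop(key, None)


# Usage with a fake loader and a controllable clock.
loader_calls = []


def load_product(product_id):
    loader_calls.append(product_id)  # stands in for a real database query
    return {"id": product_id, "name": f"product-{product_id}"}


fake_now = [0.0]
cache = ReadThroughCache(load_product, ttl_seconds=30, clock=lambda: fake_now[0])
cache.get(1)
cache.get(1)  # served from memory
calls_after_two_reads = len(loader_calls)
fake_now[0] = 31.0
cache.get(1)  # entry expired, so the loader runs again
cache.invalidate(1)
cache.get(1)  # invalidated, so the loader runs again
```

The TTL bounds how stale a cached result can get, and invalidate() lets write paths drop an entry immediately; tuning the two is a trade-off between freshness and database load.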

SQL Server Optimization Techniques

Indexing strategies in SQL Server

In SQL Server, indexing strategies play a crucial role in optimizing database performance. By creating appropriate indexes, developers can significantly reduce query execution time and improve data retrieval. SQL Server offers several kinds of indexes, including clustered and non-clustered indexes, to support different data access patterns and query requirements. A clustered index sorts and stores the table's rows by its key, so a table can have only one; a non-clustered index is a separate structure whose entries point back to the rows, and on a table that has a clustered index that pointer is the clustering key. Used together, these indexes let SQL Server locate the requested data quickly instead of scanning the whole table.
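
The difference between the two index kinds shows up in how a lookup proceeds. This Python sketch models it with sorted lists and binary search; it is an analogy, not SQL Server's storage format, and the customer rows are made up.

```python
from bisect import bisect_left

# Clustered index analogue: the rows themselves, stored in clustering-key order.
# (SQL Server keeps them in B-tree pages; a sorted list is only an analogy.)
rows = sorted([
    (205, "bob@example.com", "Bob"),
    (101, "alice@example.com", "Alice"),
    (150, "carol@example.com", "Carol"),
])
row_keys = [row[0] for row in rows]

# Non-clustered index analogue: (email, clustering key) pairs in email order.
email_index = sorted((email, customer_id) for customer_id, email, _ in rows)
email_keys = [email for email, _ in email_index]


def clustered_seek(customer_id):
    """One search on the clustering key lands directly on the full row."""
    i = bisect_left(row_keys, customer_id)
    if i < len(row_keys) and row_keys[i] == customer_id:
        return rows[i]
    return None


def nonclustered_seek(email):
    """Search the secondary structure, then do a key lookup into the clustered one."""
    i = bisect_left(email_keys, email)
    if i < len(email_keys) and email_keys[i] == email:
        customer_id = email_index[i][1]  # row locator = clustering key
        return clustered_seek(customer_id)  # second search: the "key lookup"
    return None


print(nonclustered_seek("carol@example.com"))
```

The second search in nonclustered_seek corresponds to what SQL Server execution plans show as a Key Lookup. Adding the needed columns to a non-clustered index with INCLUDE makes the index covering, which lets the optimizer skip that step.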

Query optimization in SQL Server

Query optimization in SQL Server is a crucial aspect of database performance: the goal is to make queries run faster while using fewer resources. One important supporting technique is database monitoring, which lets administrators track how SQL Server is performing, identify bottlenecks, and make informed decisions about which queries to optimize. By watching key metrics such as CPU usage, memory utilization, and disk I/O latency (for example through SQL Server's dynamic management views), administrators can pinpoint where improvement is needed and act on it. With effective monitoring, organizations can improve the overall performance of their SQL Server databases and provide a better user experience.
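
As one example of turning monitoring data into a decision, the sketch below computes the average read and write latency per database file from two snapshots of the cumulative counters that SQL Server exposes in sys.dm_io_virtual_file_stats (num_of_reads, io_stall_read_ms, num_of_writes, io_stall_write_ms). Files are keyed by a readable name here for simplicity (the real DMV identifies them by database_id and file_id), the 20 ms threshold is an assumed rule of thumb, and the sample numbers are made up to exercise the code.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FileIoSnapshot:
    """Cumulative I/O counters for one database file (columns as in the DMV)."""
    file_name: str
    num_of_reads: int
    io_stall_read_ms: int
    num_of_writes: int
    io_stall_write_ms: int


def avg_latency_ms(stall_ms_delta: int, ops_delta: int) -> float:
    """Average stall per operation over the interval; 0.0 if there was no I/O."""
    return stall_ms_delta / ops_delta if ops_delta > 0 else 0.0


def slow_files(
    before: List[FileIoSnapshot],
    after: List[FileIoSnapshot],
    threshold_ms: float = 20.0,  # assumed rule-of-thumb threshold
) -> List[Tuple[str, float, float]]:
    """Flag files whose average read or write latency between snapshots exceeds threshold_ms."""
    earlier = {snap.file_name: snap for snap in before}
    flagged = []
    for cur in after:
        prev = earlier.get(cur.file_name)
        if prev is None:
            continue  # file appeared after the first snapshot; no baseline yet
        read_ms = avg_latency_ms(cur.io_stall_read_ms - prev.io_stall_read_ms,
                                 cur.num_of_reads - prev.num_of_reads)
        write_ms = avg_latency_ms(cur.io_stall_write_ms - prev.io_stall_write_ms,
                                  cur.num_of_writes - prev.num_of_writes)
        if max(read_ms, write_ms) > threshold_ms:
            flagged.append((cur.file_name, round(read_ms, 1), round(write_ms, 1)))
    return flagged


# Made-up snapshots taken a few minutes apart, only to exercise the function.
before = [FileIoSnapshot("sales.mdf", 1000, 5000, 200, 400),
          FileIoSnapshot("sales_log.ldf", 10, 20, 5000, 10000)]
after = [FileIoSnapshot("sales.mdf", 1500, 25000, 260, 520),
         FileIoSnapshot("sales_log.ldf", 10, 20, 6000, 12000)]
print(slow_files(before, after))
```

Because the counters accumulate from server start-up, the difference between two snapshots taken a few minutes apart reflects current behaviour far better than a single reading.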

Performance tuning in SQL Server

Performance tuning in SQL Server is a crucial aspect of database optimization. It involves analyzing and improving the performance of a SQL Server database so that it runs efficiently and smoothly. One important technique is bottleneck analysis: identifying the queries and resources that limit throughput and addressing them. In practice, this means finding resource-intensive queries, optimizing indexes, and fine-tuning the server configuration. With effective performance tuning, SQL Server can deliver faster query execution, better response times, and improved scalability.
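
Finding resource-intensive queries often starts with sys.dm_exec_query_stats, which returns aggregate statistics for cached query plans, including execution_count and total_worker_time (CPU time, in microseconds). The sketch below ranks rows of that shape in Python; the QueryStat type, the string query hashes, and the sample values are hypothetical.

```python
from typing import List, NamedTuple, Tuple


class QueryStat(NamedTuple):
    """A few columns in the shape of sys.dm_exec_query_stats (hypothetical type)."""
    query_hash: str  # binary(8) in SQL Server; a hex string here
    execution_count: int
    total_worker_time: int  # total CPU time, in microseconds
    total_logical_reads: int


def top_cpu_consumers(stats: List[QueryStat], n: int = 3) -> List[Tuple[str, int, float]]:
    """Return (query_hash, executions, avg CPU ms per execution) for the top n by total CPU."""
    ranked = sorted(stats, key=lambda s: s.total_worker_time, reverse=True)
    report = []
    for s in ranked[:n]:
        avg_cpu_ms = s.total_worker_time / max(s.execution_count, 1) / 1000.0
        report.append((s.query_hash, s.execution_count, round(avg_cpu_ms, 2)))
    return report


# Made-up sample rows, for illustration only.
sample = [
    QueryStat("0xA1", 50_000, 25_000_000, 1_000_000),
    QueryStat("0xB2", 12, 60_000_000, 9_000_000),
    QueryStat("0xC3", 300, 900_000, 30_000),
]
for row in top_cpu_consumers(sample, n=2):
    print(row)
```

Ranking by total CPU finds the queries that consume the most of the server overall, which may be cheap queries that run very often; ranking by average CPU per execution instead surfaces individually expensive queries. Both views are useful when deciding where to tune.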

PostgreSQL Optimization Techniques

Indexing strategies in PostgreSQL

Indexing is a crucial aspect of database optimization. PostgreSQL offers several index types for improving query performance. The default is the B-tree index, which is versatile and efficient and supports both equality and range comparisons (=, <, <=, >, >=), making it suitable for a wide range of query patterns. Hash indexes are another option, but they support only simple equality comparisons (=) and cannot be used for range queries. PostgreSQL also supports GiST (Generalized Search Tree) indexes, which suit complex data types such as geometric and spatial data. By selecting the index type that matches each query pattern, developers can significantly improve the performance of their PostgreSQL databases.
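
Hash indexes cannot serve range queries because hashing discards ordering. This Python sketch contrasts a hash-style lookup (a dictionary of buckets) with a B-tree-style sorted structure; it is an analogy rather than PostgreSQL's implementation, and the indexed values and row ids are made up.

```python
from bisect import bisect_left, bisect_right
from collections import defaultdict

entries = [(17, "r1"), (42, "r2"), (8, "r3"), (23, "r4"), (42, "r5")]  # (indexed value, row id)

# Hash-index analogue: buckets keyed by value. Fast for '=', but the keys carry
# no ordering, so there is no way to walk "all values between 10 and 30".
hash_index = defaultdict(list)
for value, row_id in entries:
    hash_index[value].append(row_id)


def hash_equal(value):
    return list(hash_index.get(value, []))


# B-tree analogue: entries kept in key order, so '=' and ranges are both
# a binary search followed by a short sequential scan.
btree = sorted(entries)
btree_keys = [value for value, _ in btree]


def btree_range(low, high):
    """Row ids whose indexed value satisfies low <= value <= high."""
    lo = bisect_left(btree_keys, low)
    hi = bisect_right(btree_keys, high)
    return [row_id for _, row_id in btree[lo:hi]]


print(hash_equal(42), btree_range(10, 30))
```

In PostgreSQL terms, an index created with USING hash can support WHERE col = 42, while the default B-tree index supports that and WHERE col BETWEEN 10 AND 30.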

Query optimization in PostgreSQL

Query optimization in PostgreSQL is a crucial aspect of improving database performance. PostgreSQL relies on several techniques, including indexing, query rewriting, and statistics. Indexes speed up data retrieval by providing data structures that support efficient searching. Query rewriting transforms queries into more efficient forms; the planner does some of this automatically, for example by flattening simple subqueries into joins and removing unnecessary left joins. Statistics collected by ANALYZE (and automatically by autovacuum) describe the distribution of values in each column, which the planner uses to estimate row counts and choose an execution plan. By using these techniques, developers can significantly improve the speed and efficiency of their PostgreSQL databases.
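
To show how statistics feed the planner, here is a simplified sketch of how PostgreSQL estimates the row count for a col = constant condition using the most-common-values (MCV) list. The inputs mirror pg_class.reltuples and the pg_stats columns null_frac, n_distinct, most_common_vals and most_common_freqs; the function name is hypothetical, and further refinements the real planner applies, such as extra clamping, are omitted.

```python
from typing import Sequence


def estimate_eq_rows(
    value,
    reltuples: float,
    null_frac: float,
    n_distinct: float,
    mcv_vals: Sequence,
    mcv_freqs: Sequence[float],
) -> float:
    """Simplified estimate of rows matching 'col = value' from column statistics."""
    if n_distinct < 0:
        # In pg_stats, a negative n_distinct is minus the ratio of distinct values to rows.
        n_distinct = -n_distinct * reltuples
    if value in mcv_vals:
        # Common value: use its measured frequency directly.
        selectivity = mcv_freqs[list(mcv_vals).index(value)]
    else:
        # Other values: share the non-MCV, non-null fraction evenly among the
        # remaining distinct values.
        remaining = max(0.0, 1.0 - sum(mcv_freqs) - null_frac)
        other_distinct = n_distinct - len(mcv_vals)
        selectivity = remaining / other_distinct if other_distinct > 0 else 0.0
    return selectivity * reltuples


stats = dict(reltuples=10_000, null_frac=0.0, n_distinct=200,
             mcv_vals=[1, 2, 3], mcv_freqs=[0.5, 0.25, 0.125])
print(estimate_eq_rows(1, **stats))
print(estimate_eq_rows(77, **stats))
```

With these example statistics, the value 1 is estimated at 5,000 rows, while a value outside the MCV list gets (1 - 0.875) / 197 × 10,000 ≈ 6.3 rows. Stale statistics skew these numbers, which is why running ANALYZE after large data changes matters.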

Concurrency control in PostgreSQL

Concurrency control is a crucial aspect of any enterprise database system. In PostgreSQL, concurrency control mechanisms ensure that multiple transactions can safely access and modify the database at the same time. The core mechanism is multiversion concurrency control (MVCC): each statement or transaction sees a snapshot of the data, so readers do not block writers and writers do not block readers. Locking complements MVCC where explicit coordination is needed, and PostgreSQL offers row-level locks, table-level locks, and application-defined advisory locks. Together these mechanisms handle concurrent transactions efficiently and prevent data inconsistencies. PostgreSQL also supports the Read Committed (the default), Repeatable Read, and Serializable isolation levels, which provide progressively stronger guarantees of consistency and isolation. These features make PostgreSQL a reliable and robust choice for handling concurrent access in enterprise environments.
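
Under the Repeatable Read and Serializable levels, PostgreSQL may abort a transaction with a serialization failure (SQLSTATE 40001), and the PostgreSQL documentation advises applications to be prepared to retry such transactions. Here is a minimal retry wrapper as a sketch: SerializationFailure is a stand-in for the exception your driver raises for that SQLSTATE, transfer_funds is a hypothetical transaction, and the backoff values are assumptions.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class SerializationFailure(Exception):
    """Stand-in for the driver's exception for SQLSTATE 40001."""


def run_with_retry(
    transaction: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.05,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Re-run an entire transaction when it fails with a serialization error."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 1
    while True:
        try:
            return transaction()  # must BEGIN, do all its work, and COMMIT
        except SerializationFailure:
            if attempt >= max_attempts:
                raise  # give up and surface the error to the caller
            sleep(base_delay * 2 ** (attempt - 1))  # exponential backoff
            attempt += 1


# Usage: a fake transaction that conflicts twice before committing.
attempts = []
recorded_delays = []


def transfer_funds():
    attempts.append(1)
    if len(attempts) < 3:
        raise SerializationFailure("could not serialize access")
    return "committed"


result = run_with_retry(transfer_funds, sleep=recorded_delays.append)
print(result, len(attempts), recorded_delays)
```

The callable must contain the entire transaction, from BEGIN through COMMIT, so that each retry starts with a fresh snapshot rather than replaying only the statement that failed.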

Comparison of MySQL, SQL Server, and PostgreSQL

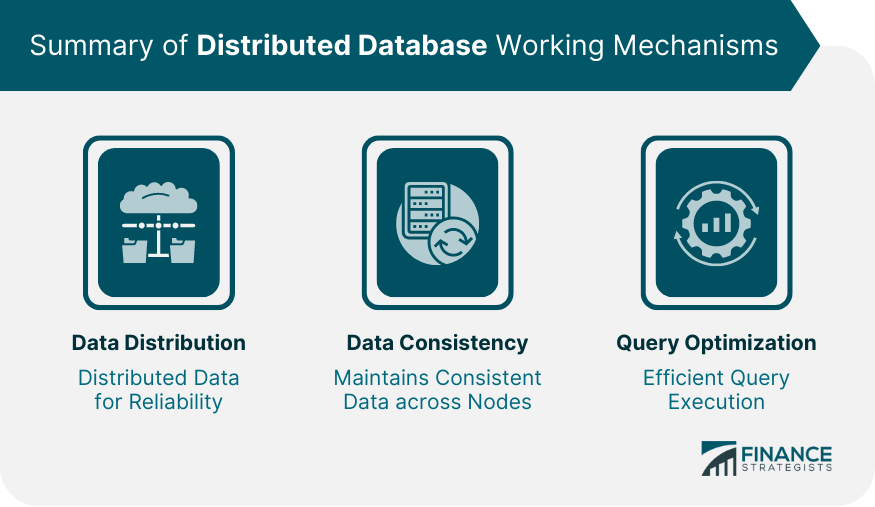

Performance comparison of the three databases

MySQL, SQL Server, and PostgreSQL are often compared on performance. Each has its own strengths and weaknesses, which make it better suited to some types of applications than others. Because relative speed depends heavily on the workload, schema, configuration, and hardware, the most reliable way to choose between them for a particular project is to compare their speed, scalability, and reliability on representative queries and data rather than relying on general rankings.
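
Relative speed depends on workload, schema, and hardware, so a comparison is best run on your own queries. The sketch below is a minimal timing harness: run_query is any callable that executes one query through the driver for the database under test (an assumption here), and the harness reports median, 95th-percentile (nearest rank), and maximum latency. The SQLite table at the end only exercises the harness and says nothing about MySQL, SQL Server, or PostgreSQL.

```python
import math
import sqlite3
import statistics
import time
from typing import Callable, Dict


def benchmark(run_query: Callable[[], object], warmup: int = 3, runs: int = 20) -> Dict[str, float]:
    """Time a query callable; return median, p95 (nearest rank) and max latency in ms."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):
        run_query()  # warm caches so a cold first run does not skew the results
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        run_query()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    p95_index = max(0, math.ceil(0.95 * len(samples)) - 1)
    return {
        "median_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }


# Exercise the harness against an in-memory SQLite table; for a real comparison,
# run_query would execute the same statement through each server's driver.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE t (x INTEGER)")
conn.executemany("INSERT INTO t VALUES (?)", [(i,) for i in range(1000)])
result = benchmark(lambda: conn.execute("SELECT COUNT(*) FROM t WHERE x % 7 = 0").fetchone())
print(result)
```

Reporting the median and a high percentile rather than a single average makes occasional slow runs visible instead of hiding them.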

Scalability comparison of the three databases

Scalability is a crucial factor when comparing database optimization techniques of MySQL, SQL Server, and PostgreSQL. The ability of a database to handle increasing workloads and growing data volumes is essential for the smooth and efficient operation of any application. Ongoing database optimization plays a significant role in ensuring that these three databases can scale effectively and meet the evolving demands of modern applications. By continuously fine-tuning performance, improving query execution, and implementing efficient indexing strategies, organizations can maximize the scalability of their chosen database solution.

Ease of use comparison of the three databases

When comparing the ease of use of MySQL, SQL Server, and PostgreSQL, several factors come into play. MySQL is known for its simplicity and user-friendly interface, making it a popular choice for beginners. SQL Server, on the other hand, offers a comprehensive set of tools and features that cater to the needs of enterprise-level applications. PostgreSQL, known for its robustness and flexibility, provides advanced features and support for complex queries. Each database has its own strengths and weaknesses when it comes to ease of use, and the choice ultimately depends on the specific requirements and preferences of the user.

Conclusion

Summary of findings

In summary, comparing the database optimization techniques of MySQL, SQL Server, and PostgreSQL shows that all three provide the core tools (indexing, query optimization, and caching), but each fits a different context. MySQL, known for its simplicity and high performance, is a popular choice for small to medium-sized applications. SQL Server, with its robust features and integration with the Microsoft ecosystem, is well suited to enterprise-level applications. PostgreSQL, known for its advanced features and scalability, is a reliable option for large-scale applications. Whichever system you choose, applying the optimization techniques appropriate to it is what ensures efficient data storage, retrieval, and processing, and ultimately better overall performance.

Recommendations for choosing the right database

When it comes to choosing the right database for your needs, there are several factors to consider. One of the most important considerations is the performance of the database. In this regard, it is worth comparing the optimization techniques of MySQL, SQL Server, and PostgreSQL. Each of these databases has its own strengths and weaknesses when it comes to performance. However, it is also important to consider other factors such as scalability, ease of use, and cost. Another database that should be considered in the evaluation process is the Oracle database, which is known for its high performance and robustness. By carefully evaluating the performance, scalability, ease of use, and cost of these databases, you can make an informed decision and choose the right database for your specific needs.

Future trends in database optimization

Future trends in database optimization are driven by growing data volumes and stricter latency requirements. A key focus is minimizing latency and maximizing throughput through advanced indexing techniques, better query optimization algorithms, and in-memory computing. Machine learning and artificial intelligence are also increasingly applied to database optimization, enabling automated tuning and adaptive query optimization. Together, these trends promise further improvements in the speed, scalability, and reliability of database systems.
