Mastering Database Performance: Key Strategies for PostgreSQL

Overview

Introduction to PostgreSQL

PostgreSQL is a powerful open-source relational database management system known for its robustness, scalability, and extensibility. With its rich feature set and support for advanced SQL, it is widely used in enterprise applications. One of the key strategies for optimizing PostgreSQL performance is indexing. An index lets the database locate rows that match specific column values without scanning the entire table, so creating appropriate indexes on frequently filtered or joined columns can significantly speed up queries. Indexes are not free, however: each one takes disk space and adds work to inserts, updates, and deletes.
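To make the idea concrete, here is a minimal Python sketch that compares a full scan with a lookup in a sorted key list using binary search. The sorted list is only a simplified stand-in for a B-tree index, and the function names and sample data are hypothetical. A real index lookup in PostgreSQL also pays for page reads and heap access, which this sketch ignores.

```python
import bisect

def build_index(rows, column):
    """Sort (value, row position) pairs: a simplified stand-in for a B-tree index."""
    pairs = sorted((row[column], pos) for pos, row in enumerate(rows))
    keys = [value for value, _ in pairs]
    positions = [pos for _, pos in pairs]
    return keys, positions

def seq_scan(rows, column, value):
    """Examine every row, as a sequential scan does: O(n) comparisons."""
    return [row for row in rows if row[column] == value]

def index_scan(rows, index, value):
    """Binary-search the sorted keys (O(log n)), then fetch only the matching rows."""
    keys, positions = index
    lo = bisect.bisect_left(keys, value)
    hi = bisect.bisect_right(keys, value)
    return [rows[pos] for pos in positions[lo:hi]]

# Hypothetical table of 100,000 customers, indexed on email.
rows = [{"id": i, "email": f"user{i}@example.com"} for i in range(100_000)]
email_index = build_index(rows, "email")
print(index_scan(rows, email_index, "user4242@example.com"))
```

Both functions return the same rows; the difference is how much data they have to examine to find them.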

Importance of Database Performance

Database performance plays a crucial role in the overall success of an application. A well-performing database executes queries faster, reduces downtime, and improves the user experience. Regular maintenance is one of the key strategies for keeping performance high. In PostgreSQL this includes running VACUUM to reclaim space held by dead row versions, running ANALYZE to keep the planner's statistics current, rebuilding bloated indexes, and purging data that is no longer needed. Autovacuum handles much of this automatically, but it still needs monitoring and tuning. Regular backups belong in the same routine, although they protect against data loss rather than improving speed. Consistent maintenance helps you spot performance bottlenecks early, limit table and index bloat, and keep the application reliable and efficient for your users.
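One routine maintenance check is to look for tables with a large share of dead row versions, which VACUUM reclaims. The sketch below assumes you have already fetched relname, n_live_tup, and n_dead_tup from the pg_stat_user_tables view (for example with a driver such as psycopg). The helper name, the 20% cutoff, and the sample rows are illustrative assumptions, not PostgreSQL defaults, and schemas are ignored for brevity.

```python
# Query to run against PostgreSQL to collect the input rows.
DEAD_TUPLES_SQL = """
SELECT relname, n_live_tup, n_dead_tup
FROM pg_stat_user_tables
ORDER BY n_dead_tup DESC;
"""

def vacuum_candidates(stats, max_dead_ratio=0.2):
    """Return VACUUM (ANALYZE) statements for tables whose dead-row share exceeds max_dead_ratio.

    stats: iterable of (relname, n_live_tup, n_dead_tup) tuples.
    The 0.2 default is an illustrative threshold, not a PostgreSQL setting.
    """
    statements = []
    for relname, live, dead in stats:
        total = live + dead
        if total and dead / total > max_dead_ratio:
            # Double quotes preserve the name's case; names containing quotes are not handled.
            statements.append(f'VACUUM (ANALYZE) "{relname}";')
    return statements

# Hypothetical statistics rows.
sample = [("orders", 800_000, 400_000), ("customers", 50_000, 1_000), ("empty_table", 0, 0)]
print(vacuum_candidates(sample))
```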

Key Performance Metrics

When it comes to database performance, there are several key metrics that need to be monitored and optimized. These metrics provide valuable insights into the overall health and efficiency of the PostgreSQL database. Some of the important metrics include:

- Response Time: The time taken by the database to respond to a query.

- Throughput: The number of queries processed per unit of time.

- Concurrency: The ability of the database to handle multiple queries simultaneously.

- CPU Usage: The percentage of CPU resources utilized by the database.

By monitoring and optimizing these metrics, organizations can keep their PostgreSQL databases performing well. Security measures such as encryption and access controls should be maintained alongside this work; they protect reliability and data integrity, although encryption usually adds some overhead rather than improving performance.
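Response time, throughput, and concurrency are closely related. The sketch below assumes you have a list of per-query response times collected over a known time window, for example from application logs. It computes throughput, the mean and 95th-percentile (nearest-rank) response time, and the average number of queries in flight using Little's law (concurrency = throughput × mean response time). The function name and sample values are hypothetical.

```python
import math
import statistics

def summarize(response_times_ms, window_seconds):
    """Summarize per-query response times (ms) observed during a window of window_seconds."""
    ordered = sorted(response_times_ms)
    n = len(ordered)
    throughput = n / window_seconds                    # queries per second
    mean_ms = statistics.fmean(ordered)
    p95_ms = ordered[math.ceil(0.95 * n) - 1]          # nearest-rank 95th percentile
    # Little's law: average queries in flight = arrival rate x average time in system.
    avg_concurrency = throughput * (mean_ms / 1000)
    return {"throughput_qps": throughput, "mean_ms": mean_ms,
            "p95_ms": p95_ms, "avg_concurrency": avg_concurrency}

# Ten hypothetical queries observed over two seconds, including one slow outlier.
samples = [12, 15, 11, 14, 250, 13, 12, 16, 15, 14]
print(summarize(samples, window_seconds=2))
```

The single slow query pulls the mean up to 37.2 ms even though most queries finish in about 11 to 16 ms. This is why percentiles are usually tracked alongside averages.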

Database Design Optimization

Normalization and Denormalization

Normalization and denormalization are two complementary approaches in database design. Normalization organizes data to eliminate redundancy and improve data integrity: the database is broken down into smaller, focused tables linked by relationships such as foreign keys. Denormalization does the opposite. It combines tables and duplicates data so that frequent or complex queries need fewer joins. The trade-off is that denormalized data is faster to read but harder to keep consistent, because every copy must be updated when the underlying value changes. The right choice depends on the specific requirements of the application. For transactional business workloads, normalization is generally preferred because it ensures data consistency and integrity, while selective denormalization is more common in reporting and analytics.
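A toy illustration of this trade-off, using in-memory Python dicts rather than real tables (all names are hypothetical): the normalized layout stores a customer's city once and needs a lookup (the analogue of a join) on read. The denormalized layout copies the city into every order, so reads skip the lookup, but an update must touch every copy.

```python
# Normalized: each customer's city is stored once; orders refer to customers by id.
customers = {1: {"name": "Acme Corp", "city": "Berlin"}}
orders = [{"order_id": 10, "customer_id": 1}, {"order_id": 11, "customer_id": 1}]

# Denormalized: the customer's name and city are copied into every order.
orders_denorm = [
    {"order_id": 10, "customer_name": "Acme Corp", "city": "Berlin"},
    {"order_id": 11, "customer_name": "Acme Corp", "city": "Berlin"},
]

def order_city_normalized(order):
    """Read path needs an extra lookup, the in-memory analogue of a join."""
    return customers[order["customer_id"]]["city"]

def order_city_denormalized(order):
    """Read path uses the copied value directly: no join."""
    return order["city"]

def move_customer_normalized(customer_id, new_city):
    """Write path touches exactly one record."""
    customers[customer_id]["city"] = new_city
    return 1

def move_customer_denormalized(customer_name, new_city):
    """Write path must update every copy; missing one would leave stale data."""
    updated = 0
    for order in orders_denorm:
        if order["customer_name"] == customer_name:
            order["city"] = new_city
            updated += 1
    return updated
```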

Indexing Strategies

Indexing plays a crucial role in optimizing database performance. Creating indexes on the appropriate columns can significantly improve query performance and reduce the time it takes to retrieve data. PostgreSQL offers several index types, each suited to different scenarios; commonly used ones include B-tree, Hash, GiST, and GIN. The right choice depends on the type of data, the queries performed, and the size of the tables. B-tree, the default, handles equality and range queries as well as sorting, so it is a good fit for large datasets queried with equality or range conditions. Hash indexes support only equality comparisons. For composite values, GIN is the usual choice for arrays, jsonb documents, and full-text search, while GiST suits geometric data, range types, and nearest-neighbor searches (it can also index full-text data). Analyze your workload and the characteristics of your data before deciding. Re-evaluate your indexes regularly as well, since unused indexes still slow down writes.
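The rules of thumb above can be written down as a small lookup table. The sketch below is a hypothetical helper, not a PostgreSQL feature. It maps a query pattern to an index access method and emits the corresponding CREATE INDEX ... USING statement, and it assumes simple, unquoted table and column names. Whether the planner actually uses the resulting index should still be confirmed with EXPLAIN.

```python
# Illustrative mapping from a query pattern to a PostgreSQL index access method.
INDEX_METHOD_FOR_PATTERN = {
    "equality": "btree",        # col = value (a hash index would also work, for = only)
    "range": "btree",           # <, <=, >, >=, BETWEEN, ORDER BY
    "array_contains": "gin",    # @> or && on an array column
    "jsonb_contains": "gin",    # @> on a jsonb column
    "full_text": "gin",         # @@ on a tsvector column
    "geometric": "gist",        # && (overlap) on geometric or range types
}

def create_index_sql(table, column, pattern):
    """Build a CREATE INDEX statement for a simple, unquoted table and column name."""
    method = INDEX_METHOD_FOR_PATTERN[pattern]
    return f"CREATE INDEX {table}_{column}_{method}_idx ON {table} USING {method} ({column});"

print(create_index_sql("orders", "created_at", "range"))
print(create_index_sql("documents", "tags", "array_contains"))
```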

Partitioning and Sharding

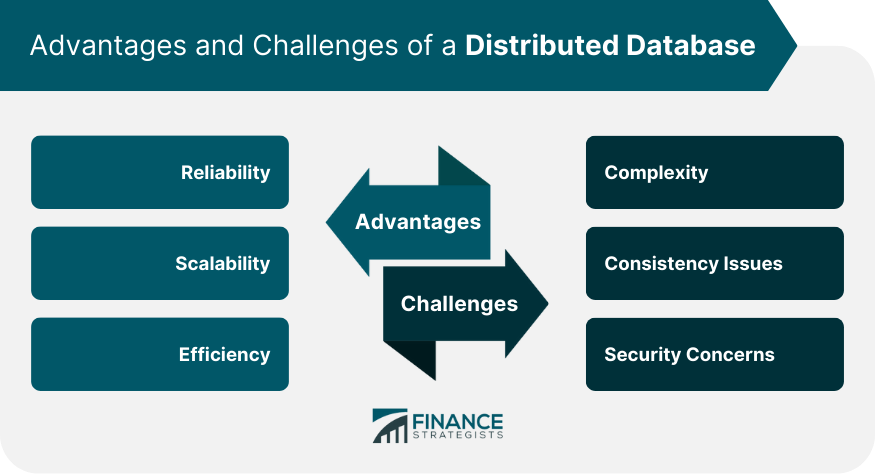

Partitioning and sharding are two strategies for handling very large datasets in PostgreSQL. Partitioning divides a large table into smaller pieces called partitions, each holding a subset of the rows and having its own indexes. PostgreSQL supports this natively through declarative partitioning by range, list, or hash. Queries that filter on the partition key can skip irrelevant partitions (partition pruning), which makes them faster. Sharding distributes data across multiple servers or nodes, each responsible for a portion of the data, which reduces the load on any single server and allows horizontal scaling. Core PostgreSQL does not shard data automatically; sharding is usually implemented in the application or with extensions such as Citus. Both approaches have trade-offs. Partitioning can improve query performance and simplify data management (for example, dropping an old partition instead of deleting millions of rows), but it requires careful planning, especially the choice of partition key. Sharding can provide high scalability, but it adds operational complexity and makes cross-shard queries and data consistency harder. Consider the specific requirements of your application carefully before choosing an approach.
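The sketch below illustrates both ideas under stated assumptions. The first function generates PostgreSQL declarative-partitioning DDL for monthly range partitions of a hypothetical events table. The second is a hypothetical application-level shard router that hashes a key to one of N shards. Table names, the key format, and the shard count are illustrative only.

```python
import zlib
from datetime import date

# Parent table for the hypothetical example (declarative partitioning, PostgreSQL 10+):
#   CREATE TABLE events (id bigint, created_at date NOT NULL, payload jsonb)
#       PARTITION BY RANGE (created_at);

def monthly_partitions_sql(parent, start, months):
    """Emit one CREATE TABLE ... PARTITION OF statement per month, starting at start's month."""
    statements = []
    year, month = start.year, start.month
    for _ in range(months):
        next_year, next_month = (year + 1, 1) if month == 12 else (year, month + 1)
        lower, upper = date(year, month, 1), date(next_year, next_month, 1)
        # FROM is inclusive and TO is exclusive, so adjacent months do not overlap.
        statements.append(
            f"CREATE TABLE {parent}_{lower:%Y_%m} PARTITION OF {parent} "
            f"FOR VALUES FROM ('{lower}') TO ('{upper}');"
        )
        year, month = next_year, next_month
    return statements

def shard_for(key, shard_count):
    """Application-level shard routing: a stable hash of the key modulo the shard count.

    zlib.crc32 is used because Python's built-in hash() of strings varies between processes.
    """
    return zlib.crc32(str(key).encode("utf-8")) % shard_count

for stmt in monthly_partitions_sql("events", date(2024, 11, 1), 3):
    print(stmt)
print(shard_for("customer-42", 4))
```

Simple modulo routing is easy to reason about, but changing the shard count remaps most keys. Consistent hashing or a lookup table is a common way to reduce that data movement.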

Query Optimization Techniques

Understanding Query Execution Plans

Query execution plans are central to optimizing database performance. A plan shows how PostgreSQL intends to execute a query: which tables are scanned and how, which join methods are used, and the estimated cost and row count of each step. Running EXPLAIN shows the estimated plan. EXPLAIN ANALYZE actually executes the query and reports real row counts and timings alongside the estimates, so use it with care on data-modifying statements. Reading these plans helps developers and database administrators find bottlenecks such as unexpected sequential scans on large tables or row estimates that are far from reality. Growing data volumes make this skill increasingly important. Once the plan has been analyzed, improvements typically come from index optimization, query rewriting, caching, or refreshing statistics with ANALYZE. Applied systematically, these steps keep PostgreSQL databases running well under the demands of data-intensive applications.
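EXPLAIN can emit machine-readable output with EXPLAIN (ANALYZE, FORMAT JSON). The sketch below walks that JSON tree, where each node's children sit under "Plans", and flags two common warning signs: sequential scans and nodes whose estimated row count is off by more than a chosen factor. The sample JSON is hand-written and heavily abridged, not real output, and the factor of 10 is an illustrative assumption. A sequential scan is not always a problem; on small tables it is often the best plan.

```python
import json

def walk(node):
    """Yield a plan node and all of its descendants (children are listed under "Plans")."""
    yield node
    for child in node.get("Plans", []):
        yield from walk(child)

def plan_warnings(explain_json, misestimate_factor=10):
    """Flag sequential scans and large row-estimate errors in EXPLAIN (ANALYZE, FORMAT JSON) output."""
    root = json.loads(explain_json)[0]["Plan"]
    warnings = []
    for node in walk(root):
        if node["Node Type"] == "Seq Scan":
            warnings.append(f"Seq Scan on {node.get('Relation Name', '?')}")
        if "Actual Rows" in node:  # present only when ANALYZE was used
            # "Actual Rows" is a per-loop average; interpret with care under nested loops.
            est, actual = node["Plan Rows"], node["Actual Rows"]
            if max(est, actual) > misestimate_factor * max(min(est, actual), 1):
                warnings.append(f"{node['Node Type']}: estimated {est} rows, got {actual}")
    return warnings

# Hand-written, abridged example (real plans include many more keys per node).
SAMPLE = """[{"Plan": {"Node Type": "Hash Join", "Plan Rows": 120, "Actual Rows": 98000,
  "Plans": [
    {"Node Type": "Seq Scan", "Relation Name": "orders", "Plan Rows": 100000, "Actual Rows": 100000},
    {"Node Type": "Hash", "Plan Rows": 50, "Actual Rows": 40,
     "Plans": [{"Node Type": "Index Scan", "Relation Name": "customers",
                "Plan Rows": 50, "Actual Rows": 40}]}]},
  "Execution Time": 812.4}]"""

for warning in plan_warnings(SAMPLE):
    print(warning)
```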

Query Rewriting and Optimization

Query rewriting and optimization are key techniques for improving PostgreSQL performance. Query rewriting changes the structure of a query, without changing its result, so that the planner can find a better execution plan. Examples include turning correlated subqueries into joins, restructuring joins, and simplifying complex expressions, such as avoiding functions applied to indexed columns in WHERE clauses. Optimization then improves execution efficiency by providing suitable indexes, creating materialized views for expensive aggregations, and keeping planner statistics current. Note that core PostgreSQL does not support optimizer hints. Extensions such as pg_hint_plan add them, but rewriting the query or adjusting indexes and statistics is usually the preferred approach. Applied together, these techniques can noticeably shorten response times for both data retrieval and data updates.
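As a concrete instance of simplifying an expression, the sketch below rewrites a predicate that wraps an indexed column in a function into an equivalent half-open range on the bare column. It uses Python's built-in sqlite3 module with an in-memory database purely as a convenient, runnable stand-in. PostgreSQL's planner is different, and there you would confirm the effect with EXPLAIN ANALYZE; the same idea applies to a predicate such as created_at::date = '2024-01-05' on a timestamp column. Table, index, and data values are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE orders (id INTEGER PRIMARY KEY, created_at TEXT, total INTEGER);
CREATE INDEX orders_created_at_idx ON orders (created_at);
INSERT INTO orders (created_at, total) VALUES
    ('2024-01-04 23:59:59', 10), ('2024-01-05 08:30:00', 20),
    ('2024-01-05 17:45:00', 30), ('2024-01-06 00:00:00', 40);
""")

# Original: wrapping the column in a function hides it from the plain index on created_at.
ORIGINAL = "SELECT id, total FROM orders WHERE date(created_at) = ?"
# Rewrite: an equivalent half-open range on the bare column, which the index can serve.
REWRITTEN = "SELECT id, total FROM orders WHERE created_at >= ? AND created_at < ?"

def rows(sql, params):
    """Run a query and return its rows sorted, so results can be compared directly."""
    return sorted(conn.execute(sql, params).fetchall())

def plan(sql, params):
    """Detail text (last column) of each SQLite EXPLAIN QUERY PLAN row."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]

print(rows(ORIGINAL, ("2024-01-05",)), plan(ORIGINAL, ("2024-01-05",)))
print(rows(REWRITTEN, ("2024-01-05", "2024-01-06")), plan(REWRITTEN, ("2024-01-05", "2024-01-06")))
```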

Effective Use of Joins and Subqueries

Using joins and subqueries effectively is another key optimization strategy. Joins combine rows from multiple tables based on related columns, while subqueries nest one query inside another. Used well, they let the database do the work in a single query instead of many, which reduces round trips and the amount of data transferred between the database and the application. A common anti-pattern is the "N+1" pattern: fetching a list of rows and then issuing a separate query for each one where a single join would do. Optimizing join and subquery performance also involves choosing the right join type (for example, INNER versus LEFT JOIN), indexing join columns, and writing precise join conditions. Following these practices helps PostgreSQL execute such queries efficiently.
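The N+1 pattern and its single-join alternative can be compared side by side. The sketch below uses Python's built-in sqlite3 module with an in-memory database only as a runnable stand-in for PostgreSQL; the schema, data, and function names are hypothetical. Both functions return the same per-customer totals, but the first issues one query per customer while the second uses one LEFT JOIN with GROUP BY.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY,
                     customer_id INTEGER REFERENCES customers(id), total INTEGER);
CREATE INDEX orders_customer_id_idx ON orders (customer_id);
INSERT INTO customers VALUES (1, 'Acme'), (2, 'Globex'), (3, 'Initech');
INSERT INTO orders (customer_id, total) VALUES (1, 50), (1, 70), (2, 20);
""")

def totals_per_customer_n_plus_one():
    """One query for the customers, then one query per customer: N + 1 round trips."""
    customers = conn.execute("SELECT id, name FROM customers").fetchall()
    totals = {}
    for customer_id, name in customers:
        (total,) = conn.execute(
            "SELECT COALESCE(SUM(total), 0) FROM orders WHERE customer_id = ?",
            (customer_id,),
        ).fetchone()
        totals[name] = total
    return totals, 1 + len(customers)  # (result, number of queries issued)

def totals_per_customer_join():
    """The same result from a single LEFT JOIN with GROUP BY: one round trip."""
    rows = conn.execute("""
        SELECT c.name, COALESCE(SUM(o.total), 0)
        FROM customers c LEFT JOIN orders o ON o.customer_id = c.id
        GROUP BY c.id, c.name
    """).fetchall()
    return dict(rows), 1

print(totals_per_customer_n_plus_one())
print(totals_per_customer_join())
```

The LEFT JOIN keeps customers without orders (COALESCE turns their NULL sum into 0), matching what the per-customer queries return.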

Performance Monitoring and Tuning

Monitoring Tools and Techniques

Monitoring a PostgreSQL database is essential for keeping it performing well. Several tools shipped with PostgreSQL help analyze performance: the pg_stat_statements extension records execution statistics for each normalized query, the pg_stat_activity view shows what each connection is currently doing, and the pg_stat_bgwriter view reports on background writer activity. Together they give insight into query performance, resource usage, and transaction activity. Monitoring shows where the problems are; query optimization, index tuning, and configuration tuning are the techniques that then fix them.
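A typical first use of pg_stat_statements is to find the queries that consume the most total execution time. The sketch below assumes PostgreSQL 13 or later, where the columns are named total_exec_time and mean_exec_time (older releases use total_time and mean_time), and assumes the extension is listed in shared_preload_libraries and created with CREATE EXTENSION pg_stat_statements. The ranking helper and the sample rows are hypothetical.

```python
# Query to run against PostgreSQL 13+ to collect the input rows.
TOP_QUERIES_SQL = """
SELECT query, calls, total_exec_time
FROM pg_stat_statements
ORDER BY total_exec_time DESC
LIMIT 20;
"""

def heaviest_queries(rows, limit=3):
    """rows: (query, calls, total_exec_time_ms) tuples.

    Returns (query, calls, % of the total time across the rows passed in).
    """
    grand_total = sum(total for _, _, total in rows)
    if not grand_total:
        return []
    ranked = sorted(rows, key=lambda r: r[2], reverse=True)[:limit]
    return [(query, calls, round(100 * total / grand_total, 1)) for query, calls, total in ranked]

# Hypothetical rows: note the rarely called query that still consumes 30% of the time.
sample = [
    ("SELECT * FROM orders WHERE customer_id = $1", 120_000, 54_000.0),
    ("UPDATE accounts SET balance = balance - $1 WHERE id = $2", 3_000, 6_000.0),
    ("SELECT count(*) FROM events", 40, 30_000.0),
    ("SELECT 1", 500_000, 10_000.0),
]
for entry in heaviest_queries(sample):
    print(entry)
```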

Identifying and Resolving Bottlenecks

Key skills for database administrators include understanding the underlying architecture, monitoring system resources, and identifying and resolving bottlenecks. Bottlenecks can occur in CPU, memory, disk I/O, or the network. By closely monitoring these resources and analyzing performance metrics, administrators can pinpoint the root cause and take appropriate action. Common remedies include optimizing queries, tuning database parameters, scaling hardware resources, and implementing caching. Doing this effectively requires a solid understanding of PostgreSQL and its components.
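One quick indicator of a memory or disk I/O bottleneck is the buffer cache hit ratio, computed from the blks_hit and blks_read columns of the pg_stat_database view. blks_read counts blocks not found in shared_buffers; some of those may still come from the operating system's page cache, so a low ratio hints at, rather than proves, disk I/O pressure. The 0.99 threshold is a commonly quoted rule of thumb for transactional workloads, and the helper names and sample rows are hypothetical.

```python
# Query to run against PostgreSQL to collect the input rows.
CACHE_SQL = """
SELECT datname, blks_hit, blks_read
FROM pg_stat_database
WHERE datname IS NOT NULL;   -- skip the row for shared system catalogs
"""

def cache_hit_ratio(blks_hit, blks_read):
    """Fraction of block requests served from shared_buffers; None if there was no activity."""
    total = blks_hit + blks_read
    return blks_hit / total if total else None

def low_hit_ratio(rows, threshold=0.99):
    """rows: (datname, blks_hit, blks_read). The threshold is a rule of thumb, not a hard limit."""
    flagged = []
    for datname, hit, read in rows:
        ratio = cache_hit_ratio(hit, read)
        if ratio is not None and ratio < threshold:
            flagged.append((datname, round(ratio, 3)))
    return flagged

sample = [("app", 9_950_000, 50_000), ("reporting", 600_000, 400_000), ("idle", 0, 0)]
print(low_hit_ratio(sample))
```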

Optimizing Configuration Parameters

When it comes to database performance, optimizing configuration parameters plays a crucial role. PostgreSQL provides a wide range of configuration options that can be fine-tuned to enhance the performance of your database. Some of the key configuration parameters to consider for performance optimization include:

- shared_buffers: The amount of memory PostgreSQL uses for its shared cache of data pages. Increasing it can improve performance by reducing disk I/O; on a dedicated database server, about 25% of system memory is a common starting point. Changing it requires a server restart.

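- work_mem: The amount of memory each sort or hash operation (used by ORDER BY, DISTINCT, hash joins, and hash aggregation) may use before spilling to temporary files on disk. Raising it can speed up sorts and joins, but the limit applies per operation, so a complex query running in many sessions can use many multiples of this value.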

- max_connections: The maximum number of concurrent connections to the database. Keeping it at an appropriate value, often with a connection pooler in front of the database, prevents resource contention and improves performance. Changing it also requires a restart.

By carefully configuring these parameters, you can significantly boost the performance of your PostgreSQL database.
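As a rough starting point, the sketch below derives initial values from total RAM. The 25% figure for shared_buffers follows the PostgreSQL documentation's suggestion for a dedicated server. The work_mem formula is a hypothetical heuristic, not an official one: it gives sorts and hashes half of the remaining memory and splits that across every connection running a couple of memory-hungry operations at once. Treat the output as a baseline to measure against, not a tuned configuration.

```python
def starting_settings(total_ram_mb, max_connections=100, ops_per_query=2):
    """Rough starting values for a dedicated PostgreSQL server (a heuristic, not an official formula)."""
    # ~25% of RAM: the starting point suggested in the PostgreSQL documentation.
    shared_buffers_mb = total_ram_mb // 4
    # Hypothetical heuristic: give sorts/hashes half of the remaining memory, split across
    # every connection running ops_per_query memory-hungry operations at once; floor at
    # 4MB, PostgreSQL's default work_mem.
    remaining_mb = total_ram_mb - shared_buffers_mb
    work_mem_mb = max(4, remaining_mb // 2 // (max_connections * ops_per_query))
    return {
        "shared_buffers": f"{shared_buffers_mb}MB",
        "work_mem": f"{work_mem_mb}MB",
        "max_connections": str(max_connections),
    }

def to_postgresql_conf(settings):
    """Render settings as postgresql.conf lines."""
    return "\n".join(f"{name} = {value}" for name, value in settings.items())

print(to_postgresql_conf(starting_settings(16_384)))
```

Because shared_buffers and max_connections only take effect after a restart, it is usually easier to experiment with work_mem first, which can also be raised for a single session with SET work_mem.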

Conclusion

Summary of Key Strategies

In this article, we have discussed several key strategies for improving the performance of PostgreSQL databases. These strategies include sound schema design, optimizing query performance, implementing proper indexing, monitoring, and tuning the database configuration settings. By following these best practices, businesses can achieve significant improvements in database performance, resulting in better response times, increased scalability, and ultimately a competitive business advantage. It is important to monitor and analyze database performance regularly to identify bottlenecks or areas for improvement. Advanced features such as parallel query execution and query analysis tools can further enhance the performance of PostgreSQL databases.

Benefits of Implementing Performance Optimization

Implementing performance optimization techniques in PostgreSQL can provide numerous benefits for your database. By optimizing your database, you can improve query performance and reduce response times, resulting in faster and more efficient data retrieval. This can have a significant impact on the overall performance of your application, allowing it to handle larger workloads and scale effectively. Additionally, performance optimization can help minimize downtime by identifying and resolving bottlenecks and performance issues before they become critical. By implementing strategies such as indexing, query optimization, and database tuning, you can ensure that your PostgreSQL database operates at its peak performance, delivering optimal results for your applications and users.

Next Steps for Database Performance Improvement

To further optimize the performance of your PostgreSQL database, start with indexing, which lets the database quickly locate rows based on specific columns. Next, analyze your queries with EXPLAIN (or EXPLAIN ANALYZE, which also reports actual row counts and timings) to identify bottlenecks and make the necessary adjustments. Regularly monitor and tune the configuration parameters of your PostgreSQL instance as your workload changes. Finally, consider partitioning large tables. Partitioning mainly speeds up queries through partition pruning and eases maintenance; placing partitions in different tablespaces on separate storage devices can additionally reduce I/O contention.
