Overview

What is data corruption?

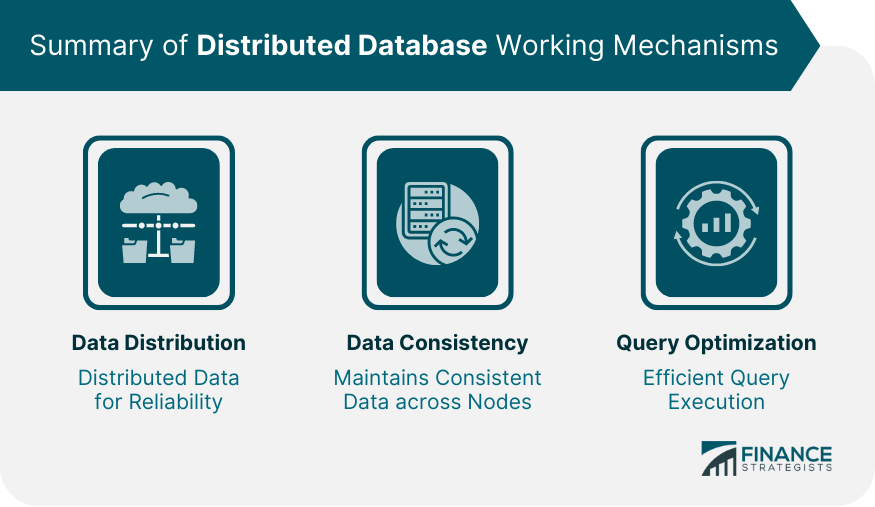

Data corruption is any unintended change to data, such as altered, truncated, or partially written values, that undermines its integrity and reliability. It can be caused by software bugs, hardware failures, or human error. Database optimization is an important part of preventing data corruption: an optimized database performs better, is less prone to errors, and is easier to keep consistent. Optimization work typically involves analyzing the database structure, indexing, tuning queries, and performing regular maintenance. Together, these practices reduce the chances of data corruption and keep the database healthy and reliable.

Importance of database integrity

Database integrity is essential to the reliability and accuracy of data. It keeps the information stored in a database consistent and valid. Without proper integrity measures, data can become corrupted, with significant consequences for businesses and organizations. When integrity is maintained, the data can be trusted as a source of insights for informed decision-making and strategic planning. Following best practices for database integrity is therefore essential for safeguarding data throughout its lifecycle.
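Many integrity rules can be declared in the schema so that the database itself rejects invalid rows. The sketch below uses Python's built-in `sqlite3` module for illustration; the `customers` and `orders` tables and the helper names `make_db` and `try_insert` are hypothetical, and other engines express the same `NOT NULL`, `UNIQUE`, `CHECK`, and `FOREIGN KEY` constraints with similar SQL.

```python
import sqlite3

# Hypothetical schema for illustration only.
SCHEMA = """
CREATE TABLE customers (
    id    INTEGER PRIMARY KEY,
    email TEXT NOT NULL UNIQUE
);
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    quantity    INTEGER NOT NULL CHECK (quantity > 0)
);
"""


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    # SQLite only enforces foreign keys when this pragma is on for the connection.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)
    return conn


def try_insert(conn: sqlite3.Connection, sql: str, params: tuple) -> bool:
    """Return True if the row was stored, False if a constraint rejected it."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.execute(sql, params)
        return True
    except sqlite3.IntegrityError:
        return False
```

Enforcing rules in the schema means every application that writes to the database is held to them, not only the code paths that remember to check.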

Common causes of data corruption

Data corruption can have many causes, including hardware failures, software bugs, power outages, and human error. Poor database performance can also contribute. When a database is not properly optimized, queries run slowly and resources are used inefficiently; long-running operations are then more likely to time out or be interrupted partway through, which increases the chance of leaving data in an inconsistent state. Optimizing database performance is therefore one of the practices that help prevent data corruption.
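Power outages and software bugs often do their damage by interrupting a multi-step write halfway. A common safeguard is to group related changes into a transaction so they are applied all at once or not at all. The following is a minimal sketch in Python with `sqlite3`; the `accounts` table and the `transfer` helper are hypothetical, and the failure is simulated with an exception.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL)")
    conn.executemany("INSERT INTO accounts VALUES (?, ?)", [(1, 100), (2, 50)])
    conn.commit()
    return conn


def transfer(conn, src, dst, amount, fail_midway=False):
    """Move `amount` between accounts; both updates succeed or neither does."""
    with conn:  # rolls back the whole transaction if anything below raises
        conn.execute("UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src))
        if fail_midway:
            # Simulates a crash or bug between the two writes.
            raise RuntimeError("simulated failure between the two writes")
        conn.execute("UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst))


def balances(conn):
    return dict(conn.execute("SELECT id, balance FROM accounts"))
```

If `transfer` fails after the first update, the rollback restores the original balances instead of leaving money debited from one account but never credited to the other.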

Backup and Recovery

Regular backups

Regular backups are essential for ensuring database integrity and preventing data corruption. By regularly backing up your database, you can protect against accidental data loss, hardware failures, and other unforeseen events. Additionally, backups provide a means to recover data in the event of a system failure or corruption. Implementing a robust backup strategy is a crucial aspect of database management and should be a priority for any organization. By following best practices for database integrity, such as performing regular backups, you can ensure the long-term stability and reliability of your database.
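As one concrete illustration, Python's `sqlite3` module exposes SQLite's online backup API through `Connection.backup`. The sketch below assumes a SQLite database file and uses a hypothetical `backup_sqlite` helper that writes each backup to a timestamped file so older copies are kept; running it on a schedule (for example from cron) would make it a regular backup. Server databases such as MySQL and PostgreSQL have their own tools for this, such as `mysqldump` and `pg_dump`.

```python
from __future__ import annotations

import sqlite3
from datetime import datetime, timezone
from pathlib import Path


def backup_sqlite(src_path: str, backup_dir: str) -> Path:
    """Copy the database at src_path into backup_dir under a timestamped name."""
    Path(backup_dir).mkdir(parents=True, exist_ok=True)
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%fZ")
    dest = Path(backup_dir) / f"{Path(src_path).stem}-{stamp}.db"
    src = sqlite3.connect(src_path)
    dst = sqlite3.connect(str(dest))
    try:
        # Connection.backup uses SQLite's online backup API; with the default
        # pages=-1 it copies the whole database in a single step.
        src.backup(dst)
    finally:
        dst.close()
        src.close()
    return dest
```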

Testing backups

Backups are only useful if they can be restored, so they should be tested regularly by restoring them to a separate environment and checking the result. One aspect worth checking is database indexing, which plays a vital role in the integrity and performance of a database. Indexes are data structures that let the database locate rows efficiently without scanning entire tables. Proper indexing optimizes query performance, and because a damaged index can cause queries to return wrong or incomplete results, a backup test should confirm that the restored indexes are present and functioning correctly. This helps maintain the integrity of the database and minimizes the chances of data corruption.
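A restore test can be partly automated. This sketch assumes the backup has already been restored to a SQLite file at `backup_path`; the hypothetical `verify_backup` helper runs `PRAGMA integrity_check` and confirms that a caller-supplied set of index names is present. A fuller test would also compare row counts or run representative queries against the restored copy.

```python
from __future__ import annotations

import sqlite3


def verify_backup(backup_path: str, expected_indexes: set[str]) -> list[str]:
    """Return a list of problems found; an empty list means the checks passed."""
    problems = []
    conn = sqlite3.connect(backup_path)
    try:
        # integrity_check returns a single row containing "ok" when no problems are found.
        result = conn.execute("PRAGMA integrity_check").fetchone()[0]
        if result != "ok":
            problems.append(f"integrity_check: {result}")
        # Compare the indexes in the restored schema with the ones we expect.
        present = {row[0] for row in conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'index'")}
        for name in sorted(expected_indexes - present):
            problems.append(f"missing index: {name}")
    finally:
        conn.close()
    return problems
```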

Implementing a disaster recovery plan

Implementing a disaster recovery plan is crucial for ensuring the integrity of a database. If data is corrupted or lost, a well-defined plan can minimize downtime and prevent significant damage to the business. One key consideration is the choice of database management system and the recovery features it offers. MySQL 8.0, for example, introduced enhancements aimed at reliability, such as a transactional data dictionary with atomic DDL, along with improvements to replication, security, and recovery. Features like these can provide a solid foundation for a disaster recovery strategy, helping businesses keep their critical data intact and available.

Data Validation

Input validation

Input validation is a crucial step in preventing data corruption and maintaining database integrity. By validating user input, applications ensure that only valid, expected data is stored in the database. Validation checks the input against predefined rules and constraints, such as allowed characters, lengths, and formats. Combined with parameterized queries, robust input validation also helps defend against security vulnerabilities such as SQL injection and cross-site scripting. Beyond security, it safeguards the quality of the data stored in the database, which matters because accurate, reliable data is the basis for informed decisions, business transformation, and organizational growth.
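The sketch below pairs a validation rule with a parameterized query, using Python's `sqlite3`. The username rule (3 to 20 letters, digits, or underscores), the `users` table, and the `add_user` helper are assumptions for illustration. Validation rejects unexpected input early, while the `?` placeholder is what keeps input from being interpreted as SQL.

```python
import re
import sqlite3

# Hypothetical rule: usernames are 3-20 letters, digits, or underscores.
USERNAME_RE = re.compile(r"[A-Za-z0-9_]{3,20}")


def add_user(conn: sqlite3.Connection, username: str) -> None:
    """Validate the username, then insert it with a parameterized query."""
    if not USERNAME_RE.fullmatch(username):
        raise ValueError(f"invalid username: {username!r}")
    with conn:
        # The value is bound to the ? placeholder separately from the SQL text,
        # so it is never parsed as part of the statement.
        conn.execute("INSERT INTO users (username) VALUES (?)", (username,))
```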

Data type validation

Data type validation is a crucial step in ensuring the integrity of a database. It involves verifying that the data being entered into a database is of the correct type. This helps prevent data corruption and ensures that the database remains consistent and reliable. By validating the data types, organizations can avoid issues such as incorrect calculations, data loss, and system crashes. Implementing data type validation also helps in improving data quality and accuracy. It is essential to define and enforce proper data type validation rules to maintain the integrity of the database.
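Type rules can be enforced in application code, in the database, or both. SQLite is loosely typed by default, so this sketch uses `CHECK (typeof(...) = ...)` constraints to reject values of the wrong type; SQLite 3.37 and later also offer `STRICT` tables for the same purpose. The `products` table and the `make_db` and `add_product` helpers are hypothetical.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    # Each CHECK inspects the storage class of the value actually stored.
    conn.execute("""
        CREATE TABLE products (
            sku   TEXT    NOT NULL CHECK (typeof(sku) = 'text'),
            price REAL    NOT NULL CHECK (typeof(price) IN ('real', 'integer')),
            stock INTEGER NOT NULL CHECK (typeof(stock) = 'integer')
        )
    """)
    return conn


def add_product(conn: sqlite3.Connection, sku, price, stock) -> bool:
    """Return True if the row passed the type checks and was stored."""
    try:
        with conn:
            conn.execute("INSERT INTO products VALUES (?, ?, ?)", (sku, price, stock))
        return True
    except sqlite3.IntegrityError:
        return False
```

SQLite still applies column type affinity, so a value that converts cleanly (for example the integer `10` stored in a `REAL` column) is accepted, while text such as `"many"` or a fractional value such as `2.5` in an `INTEGER` column is rejected.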

Cross-field validation

Cross-field validation is a crucial step in ensuring the integrity of a database. It involves validating the relationships and dependencies between different fields in a record. By performing cross-field validation, data inconsistencies and errors can be identified and prevented. This process helps maintain the accuracy and reliability of the database, ensuring that the data stored is valid and consistent. Implementing cross-field validation best practices is essential for preventing data corruption and maintaining the overall integrity of the database.
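Cross-field rules compare values within the same record. In this minimal Python sketch, the `Order` record and its rules (a shipped order needs a ship date, a pending order must not have one, and the ship date cannot precede the order date) are hypothetical examples. The function returns every violation rather than stopping at the first, which makes error reporting easier.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Order:
    status: str                       # hypothetical values: "pending" or "shipped"
    order_date: date
    ship_date: Optional[date] = None


def cross_field_errors(order: Order) -> list[str]:
    """Check rules that depend on more than one field of the record."""
    errors = []
    if order.status == "shipped" and order.ship_date is None:
        errors.append("shipped orders need a ship_date")
    if order.status == "pending" and order.ship_date is not None:
        errors.append("pending orders must not have a ship_date")
    if order.ship_date is not None and order.ship_date < order.order_date:
        errors.append("ship_date is before order_date")
    return errors
```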

Access Control

User authentication

User authentication is a critical aspect of ensuring the security and integrity of a database. It involves verifying the identity of users and granting them appropriate access privileges. By implementing strong authentication mechanisms, such as multi-factor authentication and password hashing, organizations can significantly reduce the risk of unauthorized access and data corruption. Additionally, regular audits and monitoring of user authentication processes can help identify and address any potential vulnerabilities or breaches. Overall, user authentication plays a vital role in maintaining the integrity of a database and should be implemented with utmost care and attention to detail.
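Password hashing is one part of authentication that is easy to get wrong. The sketch below uses only Python's standard library: `hashlib.pbkdf2_hmac` with a random per-user salt, and `hmac.compare_digest` for a constant-time comparison. The default iteration count is an assumption and should be checked against current guidance (for example, OWASP's Password Storage Cheat Sheet); dedicated algorithms such as Argon2 or bcrypt are common alternatives.

```python
from __future__ import annotations

import hashlib
import hmac
import os

# Work factor: an assumption to tune against current guidance and your hardware.
ITERATIONS = 600_000


def hash_password(password: str, iterations: int = ITERATIONS) -> tuple[bytes, bytes]:
    """Return (salt, digest); store both, never the plain password."""
    salt = os.urandom(16)  # a fresh random salt for every password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes,
                    iterations: int = ITERATIONS) -> bool:
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    # compare_digest avoids leaking how many leading bytes matched via timing.
    return hmac.compare_digest(digest, expected)
```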

Role-based access control

Role-based access control (RBAC) is a crucial aspect of maintaining database integrity. With RBAC, permissions are granted to roles rather than to individual users, and each user is assigned only the roles they need, so only authorized users can access specific data and functions within a database. This helps prevent data corruption and unauthorized modifications. Database tuning is a separate but complementary administrative practice: it optimizes the performance and efficiency of a database system by adjusting its parameters and configuration. A well-tuned database is more responsive, has lower latency, and performs better overall.
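The core of role-based access control is a mapping from users to roles and from roles to permissions. This Python sketch models that mapping with plain dictionaries; the roles, users, permission names, and the `is_allowed` helper are hypothetical. In practice, databases usually implement the same idea natively, for example with `CREATE ROLE` and `GRANT` statements.

```python
# Hypothetical roles and the permissions each one grants.
ROLE_PERMISSIONS = {
    "analyst": {"orders:read"},
    "clerk": {"orders:read", "orders:write"},
    "admin": {"orders:read", "orders:write", "orders:delete", "users:manage"},
}

# Hypothetical user-to-role assignments.
USER_ROLES = {
    "alice": {"analyst"},
    "bob": {"clerk"},
}


def is_allowed(user, permission, user_roles=USER_ROLES, role_permissions=ROLE_PERMISSIONS):
    """A user may act only if at least one of their roles grants the permission."""
    roles = user_roles.get(user, set())  # unknown users get no roles
    return any(permission in role_permissions.get(role, set()) for role in roles)
```

Because permissions attach to roles, changing what clerks may do is a single edit to `ROLE_PERMISSIONS` rather than a change for every clerk account.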

Audit trails

Audit trails are an essential component of database integrity: they provide a detailed record of the changes made to a database. This record is a valuable tool for identifying and resolving data corruption issues. By logging each transaction, an audit trail lets organizations trace a change back to its source, ensuring accountability and transparency. When audit records also capture timing information, they can support query performance optimization as well, giving administrators insight into how efficiently database operations run and where bottlenecks occur, so they can improve the overall speed and responsiveness of their database systems.
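One common way to build an audit trail inside the database is with triggers that copy old and new values into a log table on every change. The sketch below does this in SQLite through Python's `sqlite3`; the `accounts` and `audit_log` tables and the `make_db` helper are hypothetical. SQLite has no user accounts, so this log records what changed and when but not who; on a server database the trigger would typically also store the current user.

```python
import sqlite3

SCHEMA = """
CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL);
CREATE TABLE audit_log (
    id         INTEGER PRIMARY KEY AUTOINCREMENT,
    table_name TEXT NOT NULL,
    row_id     INTEGER NOT NULL,
    action     TEXT NOT NULL,
    old_value  INTEGER,
    new_value  INTEGER,
    changed_at TEXT NOT NULL DEFAULT (datetime('now'))
);
-- Record every balance change with its before and after values.
CREATE TRIGGER accounts_audit_update AFTER UPDATE OF balance ON accounts
BEGIN
    INSERT INTO audit_log (table_name, row_id, action, old_value, new_value)
    VALUES ('accounts', OLD.id, 'UPDATE', OLD.balance, NEW.balance);
END;
-- Record deletions, keeping the last known value.
CREATE TRIGGER accounts_audit_delete AFTER DELETE ON accounts
BEGIN
    INSERT INTO audit_log (table_name, row_id, action, old_value, new_value)
    VALUES ('accounts', OLD.id, 'DELETE', OLD.balance, NULL);
END;
"""


def make_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn
```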

Monitoring and Maintenance

Database monitoring

Database monitoring is a crucial aspect of ensuring the integrity and security of a database. It involves continuously tracking and analyzing database activity to detect potential issues or threats before they cause harm. Monitoring helps organizations find and resolve performance bottlenecks, allocate resources effectively, and improve overall system efficiency. It also plays a vital role in maintaining data consistency, availability, and reliability: by detecting data corruption early, it allows problems to be corrected quickly and minimizes downtime. Finally, monitoring lets organizations identify and address security vulnerabilities proactively, protecting sensitive information from unauthorized access or manipulation.
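A minimal monitoring sketch, assuming a SQLite database and Python's standard library: `timed_query` logs any query slower than a threshold, and `health_check` runs `PRAGMA quick_check`, a faster but less thorough variant of `PRAGMA integrity_check`. The helper names and the 0.5-second threshold are hypothetical; a production setup would feed such signals into a dedicated monitoring system.

```python
import logging
import sqlite3
import time

log = logging.getLogger("db.monitor")

SLOW_QUERY_SECONDS = 0.5  # hypothetical threshold


def timed_query(conn: sqlite3.Connection, sql: str, params=(), threshold=SLOW_QUERY_SECONDS):
    """Run a query, log it if it is slow, and return (rows, elapsed_seconds)."""
    start = time.perf_counter()
    rows = conn.execute(sql, params).fetchall()
    elapsed = time.perf_counter() - start
    if elapsed > threshold:
        log.warning("slow query (%.3fs): %s", elapsed, sql)
    return rows, elapsed


def health_check(conn: sqlite3.Connection) -> bool:
    """Return True if SQLite's quick_check reports no problems."""
    return conn.execute("PRAGMA quick_check").fetchone()[0] == "ok"
```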

Performance optimization

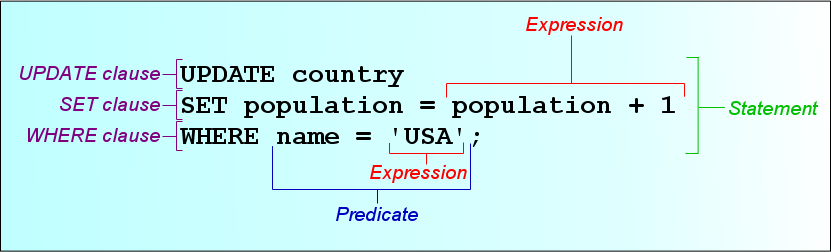

Performance optimization is a crucial aspect of maintaining a high-performing database system. By implementing effective strategies, organizations can ensure that their databases operate efficiently and deliver optimal performance. One key aspect of performance optimization is query optimization, which involves analyzing and fine-tuning database queries to minimize execution time and resource usage. Another important consideration is index optimization, where indexes are created and maintained to improve query performance. Additionally, database administrators can optimize performance by regularly monitoring and tuning the database system, identifying and resolving any bottlenecks or inefficiencies. By prioritizing performance optimization, organizations can enhance the overall efficiency and responsiveness of their database systems, ultimately leading to improved user experience and satisfaction.
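To see index optimization in action, compare a query's execution plan before and after adding an index. This sketch uses SQLite's `EXPLAIN QUERY PLAN` through Python's `sqlite3`; the `orders` table, the `idx_orders_customer` index, and the `plan` helper are hypothetical, and the exact wording of plan output varies between SQLite versions.

```python
import sqlite3


def plan(conn: sqlite3.Connection, sql: str, params=()):
    """Return the human-readable detail text of each query-plan row."""
    # The detail text is the last column of every EXPLAIN QUERY PLAN row.
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
conn.executemany("INSERT INTO orders (customer_id, total) VALUES (?, ?)",
                 [(i % 100, i * 1.5) for i in range(10_000)])

query = "SELECT total FROM orders WHERE customer_id = ?"
before = plan(conn, query, (42,))   # no index yet: expect a full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(conn, query, (42,))    # the planner can now search via the index
```

Before the index, the plan typically reports a full `SCAN` of `orders`; afterwards it should report a `SEARCH` using `idx_orders_customer`.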

Regular maintenance tasks

Regular maintenance tasks are essential for ensuring the integrity of a database, and one of them is keeping queries efficient. The query optimizer plays a vital role here. By analyzing the structure of a query, the available indexes, and statistics about the data, it determines the most efficient execution plan, selecting the best algorithms and access methods to retrieve the required data. Because the optimizer's choices are only as good as its statistics, routine maintenance such as refreshing statistics and reviewing slow queries helps minimize response times, reduce resource consumption, and keep database operations running smoothly.
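The sketch below shows two routine maintenance statements in SQLite, issued from Python: `ANALYZE` refreshes the statistics the query planner relies on, and `VACUUM` rebuilds the database file to reclaim free space. Other engines have their own equivalents (for example, `ANALYZE TABLE` in MySQL or `VACUUM ANALYZE` in PostgreSQL). The `run_maintenance` helper is hypothetical, and how often to run it is an assumption to tune per workload.

```python
import sqlite3


def run_maintenance(conn: sqlite3.Connection) -> None:
    """Refresh optimizer statistics and compact the database file."""
    # Finish any pending transaction first: VACUUM fails inside an open one.
    conn.commit()
    # ANALYZE stores table and index statistics in sqlite_stat1,
    # which the query planner consults when choosing an execution plan.
    conn.execute("ANALYZE")
    # VACUUM rebuilds the database file, reclaiming space left by deleted rows.
    conn.execute("VACUUM")
```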

Conclusion

Summary of best practices

Data corruption is a serious concern for any organization that relies on databases to store and manage critical information. To ensure database integrity and prevent data corruption, it is essential to follow a set of best practices. This article provides a summary of the key practices that should be implemented to maintain data integrity. By adhering to these guidelines, organizations can minimize the risk of data corruption and ensure the reliability and accuracy of their databases.

Importance of proactive measures

Data corruption can have severe consequences for businesses, leading to loss of critical information, compromised data integrity, and decreased productivity. It is crucial for organizations to implement proactive measures to prevent data corruption and maintain database integrity. By taking proactive steps, such as regular data backups, implementing data validation checks, and conducting routine maintenance tasks, businesses can minimize the risk of data corruption and ensure the reliability and accuracy of their databases. These proactive measures help in identifying and resolving potential issues before they can cause significant damage. By prioritizing database integrity, organizations can safeguard their data and maintain the trust of their customers and stakeholders.

Continuous improvement

Continuous improvement is crucial in maintaining the integrity of a database. One key aspect of continuous improvement is data organization. Properly organizing data ensures efficient data retrieval and minimizes the risk of data corruption. By implementing best practices for data organization, such as using consistent naming conventions and logical data structures, organizations can enhance database integrity and facilitate smooth data operations. Continuous monitoring and regular audits also play a vital role in identifying and rectifying any potential issues in the database. By continuously improving data organization and implementing proactive measures, organizations can mitigate the risk of data corruption and ensure the long-term integrity of their databases.
