Getting into database optimization as a beginner can be overwhelming. We understand that. There are so many aspects to the optimization processes that database administrators carry out like: explore, design, implement and validate scenarios. It’s normal to feel intimidated by it. We have almost two decades of experience working with databases and tuning them and we have learned a lot along the way. Today, we will help you get started with some simple techniques that you can experiment with and practice. Though these techniques are simple, they are powerful and they do have the potential to significantly improve the overall database performance.

What is Database Optimization?

Database optimization is the process of analyzing various components of the database including SQL queries and tuning them to improve database performance. The initial database design, or the DB server hardware, or even the initial queries might work efficiently at the beginning. However, with time as data grows, it might start to affect the performance. Bottlenecks might appear, queries might run slowly, or you might run out of storage. These pitfalls make it imperative to regularly perform checks and fix the issues.

These tuning tasks are broken up into several units like SQL query optimization, analyzing slow queries, load monitoring, DB index analysis and optimization, schema design evaluation, and more. Database administrators and DB developers take these tasks individually and try to enhance them. One of the major tasks here is to set up monitoring systems that keep an eye on various parameters and notify the DBA when there is a problem.

Optimization Techniques

In this section, we will look at some optimization techniques that work with queries, tables, and indexes.

1. Changing The Query Structure

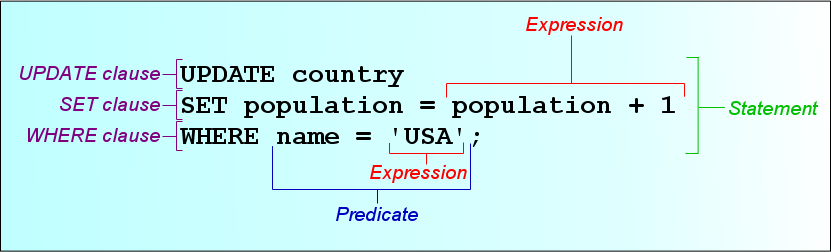

There are times when how the query is written leads to long execution times. The only way to speed it up would be to rewrite the query or make changes to it. One of the most often ignored rules that require changes is queries that use “*” to select all fields.

It’s always faster to list the columns that need to be extracted instead of using “*” which fetches all the columns increasing the execution time. Here’s an example:

Original Query:

SELECT * FROM distributor WHERE location = “Toronto” and year = 2020

Optimized Query:

SELECT name, regionCode, establishedYear, stock FROM distributor WHERE location = “Toronto” AND year = 2020

Cursors are another time-guzzling feature in SQL. They help in iterating over the rows one at a time. So, it takes far more time to go over the same number of rows than without using cursors as that many individual reads are not required. Thus, you need to ensure that you only use cursors when it’s absolutely necessary.

The use of nested SQL queries like cursors needs to be restricted to when it’s absolutely needed. A nested SQL query is a technique of using one query within another. This is used when the result of one query is used by another. The disadvantage here is that the outer query has a dependency on the inner one and the optimizer will not able to execute them in parallel increasing the overall execution time.

Another important section of database optimization is how we use the SQL inbuilt functions. SQL provides many in-built functions that perform a set of operations on the rows being searched. AVG() to find the average of some values, SUM() to find the sum of values, RIGHT() a string manipulation function to extract characters from a string are some examples of inbuilt functions. Functions need to perform some operations hence they take more time to execute than equivalent clauses in a SQL query. Here’s an example to optimize such queries:

Original SQL Query:

SELECT name, age, joinDate, salary FROM employee WHERE RIGHT(employeeId,4) = ‘2021’

Optimized SQL Query:

SELECT name, age, joinDate, salary FROM employee WHERE employeeId LIKE ‘%2021’

2. Changing the DB structure

At times, we have no option but to change the structure of the DB or the DB design to ensure the database performance expectations are met. Changes can be minor or require the entire table to be redesigned. Here, let’s look at some simple changes that can be made to the DB structure.

Pre-calculating fields

If there are operations where you need to constantly compute constant values, then it’s better to do compute them while writing it into the DB and add that value to a new column in the table. Pre-computation saves a lot of time while retrieving the data. When the dataset is small, you might not see much difference, but on large data, this will surely become prominent, and pre-computation would be the only way to decrease the processing time.

Denormalize the database

One of the tasks under database optimization that we carry out is the normalization of the DB schema. This ensures that the data redundancy is reduced and the integrity of the data increases. However, the drawback of normalization is it can create many levels of tables that start to hamper the performance of queries that need to access all the levels. In such scenarios, we need to consider denormalizing the database and combining tables. This will ensure the query has to traverse fewer tables thereby speeding up the query.

Split tables to handle large data

Certain types of data are inherently large like images or videos or sizable textual data. Read time for a query depends on the data size so, if the data size is very huge, then it becomes very difficult for the query to run efficiently. In such scenarios, we need to split the tables so that the big data is moved out of the primary table being queried.

3. Execution Plan and Caching

SQL query execution plan is the set of steps that the DB optimizer has derived that tells how the query needs to be executed. Computation of this plan is a time taking process as the database needs to evaluate many possible options and find the best one. This is where caching can help.

Database caches cannot only store the query responses but also the query plans. However, to cache the execution plan, the cache size needs to be sufficiently high. Since we have the option to change the buffer pool size, we can set it to higher values so that the execution plans can be stored as well.

Conclusion

Database optimization is surely a vast topic with a lot of aspects to consider and a lot of parameters to tweak and it can get intimidating when starting with it. However, taking things slowly one at a time will help to get a grasp on them.

Here, we have seen very simple checks and measures to optimize various parts of the database and a SQL query. As I’ve mentioned earlier, just because they are simple doesn’t mean that they aren’t used. In fact, they are very powerful and we DBAs use them often in our processes.