Overview

Importance of database backups

Database backups play a crucial role in ensuring the availability and integrity of data. They serve as a safety net, protecting against data loss caused by various factors such as hardware failures, software errors, or even natural disasters. Without proper backups, organizations risk losing valuable information and facing significant downtime. Additionally, backups enable efficient data recovery in case of accidental deletion or corruption. By regularly backing up databases, businesses can minimize the impact of potential disruptions and ensure the continuity of their operations.

Challenges in database backup and recovery

Database backup and recovery is essential for data integrity and availability, but it comes with its own set of challenges. As databases grow, backups and restores take longer. Keeping potential data loss small requires frequent or continuous backups. Backup files must be secured, because they contain the same sensitive data as the production database and are a target for unauthorized access. Finally, the complexity of modern database systems and the potential for human error further complicate the backup and recovery process. Addressing these challenges is the starting point for optimizing database backups and ensuring efficient data recovery.

Goals of optimizing database backups

The goals of optimizing database backups are to ensure efficient data recovery and to minimize downtime. Strategies such as incremental backups, compression, and deduplication reduce the time, storage, and network resources that backup and recovery consume. Optimized backups also place less load on production systems while they run, so users see less performance degradation during backup windows. The result is that critical data stays protected and can be restored quickly after data loss or system failure.

Understanding Database Backups

Types of database backups

Database backups are crucial for recovering data after a system failure or data loss, and organizations can combine several types of backup to optimize their strategy. A full backup creates a complete copy of the entire database. It is the simplest to restore from, but it is the most time-consuming and resource-intensive to create. An incremental backup captures only the changes made since the previous backup of any type, which makes it the fastest and smallest to take. A differential backup captures all changes made since the last full backup. Differential backups are faster and smaller than full backups, but because each one repeats every change since that full backup, they grow larger than incremental backups as time passes. The trade-off shows up at restore time: recovering from differentials needs only the last full backup plus the latest differential, whereas recovering from incrementals needs the last full backup plus every incremental taken since. To optimize database backups for efficient data recovery, choose the backup types that match the organization’s recovery needs and resources, run backups on a regular schedule, store them securely, and test the restore process.
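
To make the restore-time trade-off concrete, here is a minimal Python sketch that works out which backups must be applied to restore to a given point. It assumes the definitions above (a differential holds all changes since the last full backup; an incremental holds changes since the previous backup of any type). The `Backup` class and `restore_chain` function are hypothetical; real backup products track these chains in their own catalogs.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Backup:
    label: str
    kind: str      # "full", "differential", or "incremental"
    taken_at: int  # any value that increases with time


def restore_chain(history: List[Backup], target: int) -> List[Backup]:
    """Return the backups to apply, in order, to restore to time `target`.

    Assumes: differential = all changes since the last full backup;
    incremental = changes since the previous backup of any type.
    """
    usable = sorted((b for b in history if b.taken_at <= target), key=lambda b: b.taken_at)
    # Start from the most recent full backup at or before the target.
    full_idx = max((i for i, b in enumerate(usable) if b.kind == "full"), default=None)
    if full_idx is None:
        raise ValueError("no full backup precedes the target")
    chain = [usable[full_idx]]
    later = usable[full_idx + 1:]
    # The newest differential supersedes everything between it and the full backup.
    diff_idx = max((i for i, b in enumerate(later) if b.kind == "differential"), default=None)
    if diff_idx is not None:
        chain.append(later[diff_idx])
        later = later[diff_idx + 1:]
    # Every incremental after that point must be applied, oldest first.
    chain.extend(b for b in later if b.kind == "incremental")
    return chain
```

For a history of a Sunday full backup, a Monday incremental, a Tuesday differential, and Wednesday and Thursday incrementals, restoring to Thursday needs the Sunday full, the Tuesday differential, and both later incrementals. The Monday incremental is skipped because the differential already contains its changes.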

Backup strategies and best practices

Backup strategies and best practices determine how reliably an organization can recover its data. The first consideration is backup frequency: the interval between backups sets the maximum amount of data that can be lost, often expressed as the recovery point objective (RPO). If backups run every six hours, a failure just before the next run can lose up to six hours of changes. The second is the storage used for backups, which should offer high availability and durability so that backups remain accessible and can be restored quickly after a disaster. The third is periodic testing to verify that backup files are complete and restorable. Following these practices strengthens an organization’s data recovery capabilities and supports the continuity of its business operations.
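
The link between backup frequency and potential data loss can be checked mechanically. The Python sketch below assumes you have a list of completed backup timestamps; `worst_case_data_loss` and `meets_rpo` are illustrative names, not part of any backup tool. It reports the longest interval during which a failure would have lost every change since the previous backup, and it ignores the period before the first recorded backup.

```python
from datetime import datetime, timedelta
from typing import List


def worst_case_data_loss(backup_times: List[datetime], now: datetime) -> timedelta:
    """Longest gap in which a failure would lose every change since the prior backup.

    The gap from the newest backup to `now` is included, because a failure
    right now would lose everything written since that backup.
    """
    if not backup_times:
        raise ValueError("no backups recorded")
    points = sorted(backup_times) + [now]
    return max(later - earlier for earlier, later in zip(points, points[1:]))


def meets_rpo(backup_times: List[datetime], now: datetime, rpo: timedelta) -> bool:
    """True if no gap between consecutive backups (or since the last one) exceeds the RPO."""
    return worst_case_data_loss(backup_times, now) <= rpo
```

For backups at 00:00, 06:00, 12:00, and 20:00 checked at 22:00, the worst case is the 8-hour gap between 12:00 and 20:00, so a 6-hour RPO is not met even though most runs are six hours apart.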

Backup tools and technologies

Backup tools and technologies automate the backup process so that data is backed up securely and consistently. They typically support incremental backups, which back up only the changes made since the last backup and so reduce the amount of data that must be transferred and stored, and they often offer compression to further reduce the storage space required. Popular tools include Acronis Backup, Veeam Backup & Replication, and Veritas NetBackup, each offering a range of features to meet the needs of different organizations and to support reliable data recovery after data loss or system failure.

Analyzing Backup Performance

Measuring backup speed and efficiency

Measuring backup speed and efficiency is the basis for optimizing database backups. By tracking how long backups take and how much CPU, disk I/O, and network bandwidth they consume, organizations can identify areas for improvement and confirm that backups and restores fit within their required time windows. A related concern is fault tolerance, the system’s ability to keep functioning when a component fails. Backup measurements show whether data can be restored quickly enough when a failure does occur, while complementary measures such as redundant backup copies, data mirroring, and automated failover reduce downtime and make it less likely that a full restore is needed at all. Backup processes should be monitored and evaluated regularly, with adjustments made as data volumes and workloads change.
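
A simple way to start measuring is to time a backup and derive throughput and compression ratio from the file sizes. This Python sketch assumes a file-based backup (for example, a dump file) compressed with `gzip` from the standard library; `timed_backup` is a hypothetical helper, and a real database backup would be timed around whatever tool actually produces it.

```python
import gzip
import os
import shutil
import time


def timed_backup(src_path: str, dest_path: str) -> dict:
    """Gzip-copy src_path to dest_path and report duration, throughput, and compression ratio."""
    start = time.perf_counter()
    with open(src_path, "rb") as src, gzip.open(dest_path, "wb") as dst:
        shutil.copyfileobj(src, dst, 1024 * 1024)  # stream in 1 MiB pieces
    elapsed = time.perf_counter() - start
    # Sizes are read after the gzip file is closed, so the output is complete.
    src_bytes = os.path.getsize(src_path)
    dest_bytes = os.path.getsize(dest_path)
    return {
        "seconds": elapsed,
        "mb_per_second": src_bytes / 1_000_000 / elapsed if elapsed > 0 else float("inf"),
        "compression_ratio": src_bytes / dest_bytes if dest_bytes else float("inf"),
    }
```

Recording these three numbers for every run, and watching how they trend as the database grows, gives a baseline against which slowdowns become visible.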

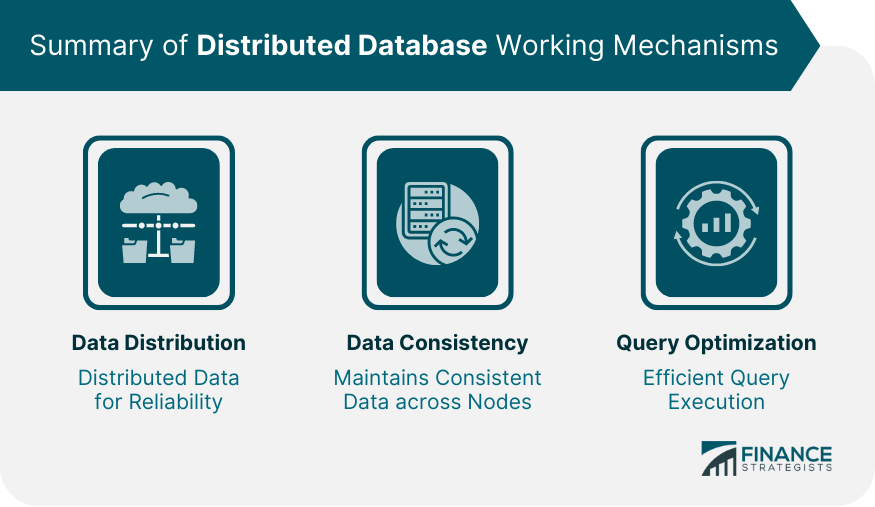

Identifying bottlenecks in backup process

Identifying bottlenecks in the backup process is essential for optimizing database backups and ensuring efficient data recovery. A backup runs only as fast as its slowest stage: reading data from the source disks, compressing it, sending it over the network, or writing it to backup storage. Database design also matters, because it determines how much data each backup must read. For example, a schema that separates large, rarely changing historical data from active data can allow the two to be backed up on different schedules. Administrators should therefore examine the database design alongside the hardware and network infrastructure used for backups. Addressing the bottlenecks they find lets organizations shorten backup windows and minimize the downtime associated with data recovery.
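
One way to locate the bottleneck is to time each stage of the pipeline separately. The following Python sketch assumes a file-based backup compressed with the standard `zlib` module and accumulates read, compress, and write times chunk by chunk; the function name and 1 MiB chunk size are illustrative choices. Writes are buffered, so the final `os.fsync` is counted as write time to account for data actually reaching the disk.

```python
import os
import time
import zlib


def profile_backup_stages(src_path: str, dest_path: str, chunk_size: int = 1 << 20) -> dict:
    """Compress src_path into dest_path, timing the read, compress, and write stages separately."""
    timings = {"read": 0.0, "compress": 0.0, "write": 0.0}
    compressor = zlib.compressobj(6)
    with open(src_path, "rb") as src, open(dest_path, "wb") as dst:
        while True:
            t0 = time.perf_counter()
            chunk = src.read(chunk_size)
            t1 = time.perf_counter()
            timings["read"] += t1 - t0
            if not chunk:
                break
            data = compressor.compress(chunk)
            t2 = time.perf_counter()
            timings["compress"] += t2 - t1
            dst.write(data)
            timings["write"] += time.perf_counter() - t2
        t3 = time.perf_counter()
        tail = compressor.flush()  # emit any data still buffered in the compressor
        t4 = time.perf_counter()
        timings["compress"] += t4 - t3
        dst.write(tail)
        dst.flush()
        os.fsync(dst.fileno())  # include the time for buffered data to reach the disk
        timings["write"] += time.perf_counter() - t4
    timings["slowest_stage"] = max(("read", "compress", "write"), key=lambda k: timings[k])
    return timings
```

If compression dominates, a faster compression level or more CPU cores may help; if reads or writes dominate, the storage or network path is the place to look.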

Optimizing backup performance

In database management, optimizing backup performance is crucial for efficient data recovery. Performance tuning ensures that backups finish within their scheduled window without degrading overall system performance. Techniques such as parallel backups, compression, and incremental backups can significantly improve the speed and efficiency of backup processes. Performance tuning also extends to the backup storage infrastructure, which must be able to absorb the volume of data being backed up and serve backup files quickly when they are needed for recovery. Prioritizing performance tuning in a backup strategy helps minimize downtime and maximize the availability of critical data.
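
Parallel backups can be illustrated by compressing several backup files at once, similar in spirit to tools that split a backup across multiple output files. This Python sketch uses a thread pool, relying on CPython largely releasing the GIL during file I/O and zlib compression so the threads can overlap. `parallel_backup` and `compress_one` are hypothetical helpers, and the sketch assumes the source file names are unique.

```python
import gzip
import shutil
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
from typing import List


def compress_one(src: Path, dest_dir: Path) -> Path:
    """Gzip a single file into dest_dir and return the path of the result."""
    dest = dest_dir / (src.name + ".gz")
    with open(src, "rb") as f_in, gzip.open(dest, "wb") as f_out:
        shutil.copyfileobj(f_in, f_out)
    return dest


def parallel_backup(sources: List[Path], dest_dir: Path, workers: int = 4) -> List[Path]:
    """Compress several files concurrently; results come back in the same order as sources."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda src: compress_one(src, dest_dir), sources))
```

The right number of workers depends on which resource saturates first; past that point, extra parallelism only adds contention.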

Ensuring Data Integrity

Verifying backup data integrity

Verifying backup data integrity is a crucial step in optimizing database backups for efficient data recovery. Verification confirms that backup files are complete and accurate, so corruption is discovered during routine checks rather than in the middle of a recovery. Regular integrity checks let database administrators find inconsistencies or errors in backup data and correct them, for example by taking a fresh backup. Typical checks include comparing the checksums of backup files against values recorded when the backups were created, comparing file sizes, and cross-checking restored data against the original database. Checksum verification also helps detect unauthorized modification or tampering of backup files. Together, these checks make backups a dependable basis for a smooth data recovery.
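
The checksum and size comparisons described above can be sketched in Python with `hashlib`: record a SHA-256 digest and size for each backup file when it is created, then recheck them later. The manifest format (a JSON file keyed by file name) and the function names are assumptions for illustration, not a standard.

```python
import hashlib
import json
from pathlib import Path
from typing import List


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large backups need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_manifest(files: List[Path], manifest: Path) -> None:
    """Record the size and SHA-256 of each backup file at creation time."""
    entries = {p.name: {"size": p.stat().st_size, "sha256": sha256_of(p)} for p in files}
    manifest.write_text(json.dumps(entries, indent=2))


def verify_manifest(backup_dir: Path, manifest: Path) -> List[str]:
    """Return a list of problems; an empty list means every file matched its record."""
    problems = []
    for name, expected in json.loads(manifest.read_text()).items():
        path = backup_dir / name
        if not path.exists():
            problems.append(f"{name}: missing")
        elif path.stat().st_size != expected["size"]:
            problems.append(f"{name}: size mismatch")
        elif sha256_of(path) != expected["sha256"]:
            problems.append(f"{name}: checksum mismatch")
    return problems
```

For tamper detection, the manifest should be stored separately from the backups (or signed), since anyone who can rewrite a backup file could otherwise rewrite its recorded checksum too. A passing checksum shows the file is unchanged, not that it restores correctly, so test restores are still needed.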

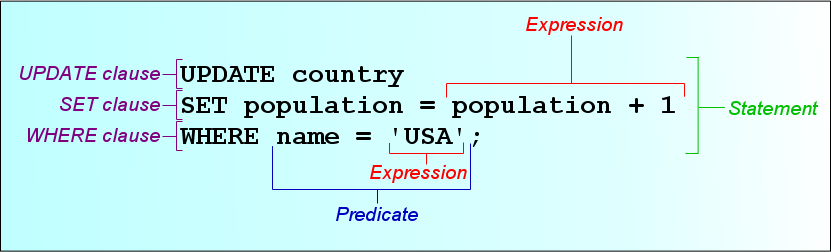

Implementing data validation techniques

Implementing data validation techniques helps ensure the integrity and accuracy of data in a database. Validation checks incoming data against predefined rules or constraints, such as data types, range limits, and referential integrity, and rejects data that violates them. This prevents erroneous or inconsistent data from being inserted, which maintains data quality and reliability. Validation matters for backups because a backup preserves whatever the database contains: bad data that gets in is copied faithfully into every later backup. In the context of SQL Azure (now Azure SQL Database), data should likewise be validated before it is stored, so that corrupted or inconsistent records are not carried into the cloud database and its backups. Robust validation therefore complements backup optimization by keeping the data being backed up, and later recovered, trustworthy.
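
Here is a minimal Python sketch of rule-based validation covering the three kinds of checks mentioned above: data types, range limits, and referential integrity. The orders table, its fields, and the 1 to 10,000 quantity limit are hypothetical; in practice many of these rules are also worth enforcing as database constraints (`CHECK`, `FOREIGN KEY`) so that no client can bypass them.

```python
from typing import Dict, List, Set

REQUIRED_INT_FIELDS = ("order_id", "customer_id", "quantity")  # hypothetical schema


def validate_orders(rows: List[dict], known_customers: Set[int]) -> Dict[int, List[str]]:
    """Map row index to its rule violations; rows missing from the result passed every check."""
    errors: Dict[int, List[str]] = {}
    for i, row in enumerate(rows):
        problems = []
        # Type check: bool is a subclass of int in Python, so exclude it explicitly.
        for field in REQUIRED_INT_FIELDS:
            value = row.get(field)
            if not isinstance(value, int) or isinstance(value, bool):
                problems.append(f"{field} must be an integer")
        if not problems:
            # Range limit (hypothetical business rule).
            if not 1 <= row["quantity"] <= 10_000:
                problems.append("quantity must be between 1 and 10000")
            # Referential integrity: the customer must already exist.
            if row["customer_id"] not in known_customers:
                problems.append("customer_id does not match any customer")
        if problems:
            errors[i] = problems
    return errors
```

Rows that come back with violations would be rejected or quarantined before insertion, so they never reach the database or its backups.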

Preventing data corruption during backups

Data corruption during backups is a critical issue that must be addressed to ensure the integrity and reliability of the database. DBAs, particularly those managing large data warehouses, should implement preventive measures to minimize the risk of corruption during the backup process. A key strategy is to validate backup files regularly to detect anomalies or errors. It is also important to use reliable backup software that supports checksum verification and error detection. A robust backup and recovery strategy built on these measures significantly reduces the chance that corruption goes unnoticed and ensures efficient data recovery when needed.
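
One preventive measure that fits here is never letting a partially written file replace a good backup. The Python sketch below writes backup bytes to a temporary file in the destination directory, forces them to storage with `os.fsync`, re-reads and checksums the result, and only then renames it into place with `os.replace`. The function name is hypothetical, and the sketch holds the whole backup in memory for simplicity; a real tool would stream.

```python
import hashlib
import os
import tempfile
from pathlib import Path


def write_backup_atomically(data: bytes, dest: Path) -> str:
    """Write data to dest only after it has been flushed and verified; return its SHA-256."""
    expected = hashlib.sha256(data).hexdigest()
    # Temp file in the same directory, so the final rename stays on one filesystem.
    fd, tmp_name = tempfile.mkstemp(dir=dest.parent, prefix=dest.name + ".", suffix=".tmp")
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(data)
            f.flush()
            os.fsync(f.fileno())  # push the bytes from OS buffers to the storage device
        # Re-read and re-hash; this may be served from the OS cache, so it catches
        # software-level errors (truncation, wrong data) rather than media faults.
        with open(tmp_name, "rb") as f:
            if hashlib.sha256(f.read()).hexdigest() != expected:
                raise OSError("checksum mismatch after write; backup discarded")
        # Atomic on POSIX: readers see either the old backup or the complete new one.
        os.replace(tmp_name, dest)
    except BaseException:
        if os.path.exists(tmp_name):
            os.remove(tmp_name)
        raise
    return expected
```

The returned digest can be stored with the backup's metadata so that later verification runs have a reference value to compare against.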

Streamlining Backup and Recovery Processes

Automating backup and recovery tasks

Automating backup and recovery tasks is crucial for optimizing database backups and ensuring efficient data recovery, because it reduces the risk of human error and improves overall system reliability. The database administrator remains central to this work: the DBA implements and manages the backup and recovery strategy, configures backup schedules, monitors backup jobs, and ensures the integrity of backup data. Automation tools and scripts then carry out these tasks consistently, which allows faster and more efficient data restoration after a system failure. For organizations that want to optimize their database backups and minimize downtime after data loss, automation is essential.
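
As an example of a scriptable backup task, Python's standard `sqlite3` module exposes SQLite's online backup API through `Connection.backup`. The sketch below assumes a SQLite database (chosen only because it is self-contained; other engines have their own backup tools). It takes a timestamped backup, verifies it with `PRAGMA integrity_check`, and prunes all but the newest copies. The function name, file naming scheme, and default retention of 7 copies are illustrative; scheduling would be left to cron, Task Scheduler, or a job orchestrator.

```python
import sqlite3
from datetime import datetime, timezone
from pathlib import Path


def backup_sqlite(db_path: Path, backup_dir: Path, keep: int = 7) -> Path:
    """Take an online backup of a SQLite database, check it, and prune old copies."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    # Fixed-width UTC timestamp, so file names sort in chronological order.
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%f")
    dest = backup_dir / f"{db_path.stem}-{stamp}.sqlite"
    src = sqlite3.connect(db_path)
    dst = sqlite3.connect(dest)
    try:
        src.backup(dst)  # SQLite online backup API (Python 3.7+)
        result = dst.execute("PRAGMA integrity_check").fetchone()[0]
    finally:
        dst.close()
        src.close()
    if result != "ok":
        dest.unlink()
        raise RuntimeError(f"integrity_check failed: {result}")
    # Retention: keep only the newest `keep` backups of this database (keep must be >= 1).
    backups = sorted(backup_dir.glob(f"{db_path.stem}-*.sqlite"))
    for old in backups[:-keep]:
        old.unlink()
    return dest
```

Raising an exception on a failed check matters for automation: the scheduler sees a non-zero exit and can alert someone, instead of silently keeping a bad backup.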

Implementing incremental backups

Implementing incremental backups is a key step in optimizing database backups for efficient data recovery. Unlike full backups, which copy the entire database every time, incremental backups capture only the changes made since the last backup, which significantly reduces backup time and storage requirements. They do have drawbacks. Restoring from incremental backups requires applying every incremental backup taken since the last full backup, in order, which can be time-consuming. Incremental backups also depend on the integrity of the whole chain: if any backup in the chain is corrupted or lost, recovery can only proceed up to the backup before it. It is therefore important to test backup integrity regularly and to maintain a reliable overall backup strategy.
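
At the file level, an incremental backup can be sketched by remembering a content hash for every file and copying only files whose hash has changed since the previous run. This Python sketch assumes each run writes into its own destination directory and keeps its state in a JSON file outside the source tree. The names are hypothetical, and the sketch does not record deleted files, which a real tool would also need to track to restore accurately.

```python
import hashlib
import json
import shutil
from pathlib import Path
from typing import Dict, List


def file_digest(path: Path) -> str:
    """SHA-256 of a file's contents (reads the whole file; fine for a sketch)."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def incremental_backup(src_dir: Path, dest_dir: Path, state_file: Path) -> List[str]:
    """Copy only files whose content changed since the previous run; return their relative paths."""
    previous: Dict[str, str] = json.loads(state_file.read_text()) if state_file.exists() else {}
    current: Dict[str, str] = {}
    copied = []
    for path in sorted(p for p in src_dir.rglob("*") if p.is_file()):
        rel = path.relative_to(src_dir).as_posix()
        digest = file_digest(path)
        current[rel] = digest
        if previous.get(rel) != digest:  # new or modified since the last run
            target = dest_dir / rel
            target.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(path, target)
            copied.append(rel)
    # The new state becomes the baseline for the next incremental run.
    state_file.write_text(json.dumps(current))
    return copied
```

Because each run compares against the previous run rather than against the last full backup, restoring requires every run's directory in order, which is exactly the chain dependency described above.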

Utilizing compression and deduplication

Compression and deduplication can significantly improve the efficiency of database backups. Compression encodes data more compactly, producing smaller backup files that are cheaper to store and faster to transfer. Deduplication identifies repeated blocks of data and stores each unique block only once, further reducing backup size. Besides saving storage space, both techniques can speed up backups and restores when disk or network bandwidth is the bottleneck, since less data has to be read and moved, although compression and decompression add CPU work. With data volumes growing rapidly, adopting these techniques helps organizations keep backup and recovery processes fast while saving time and resources.
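
The two techniques can be combined in a few lines. This Python sketch splits data into fixed-size chunks, identifies duplicates by SHA-256 digest, and stores each unique chunk once in `zlib`-compressed form, along with a recipe of chunk digests for reassembly. The 4 KiB chunk size and the function names are assumptions; production deduplication systems typically use content-defined (variable-size) chunking so that inserting a few bytes does not shift every later chunk boundary.

```python
import hashlib
import zlib
from typing import Dict, List, Tuple


def dedup_and_compress(data: bytes, chunk_size: int = 4096) -> Tuple[List[str], Dict[str, bytes]]:
    """Store each unique fixed-size chunk once, compressed; return (recipe, chunk store)."""
    recipe: List[str] = []
    store: Dict[str, bytes] = {}
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        key = hashlib.sha256(chunk).hexdigest()
        if key not in store:  # duplicate chunks are referenced, not stored again
            store[key] = zlib.compress(chunk, 6)
        recipe.append(key)
    return recipe, store


def reassemble(recipe: List[str], store: Dict[str, bytes]) -> bytes:
    """Rebuild the original bytes by decompressing chunks in recipe order."""
    return b"".join(zlib.decompress(store[key]) for key in recipe)
```

For 12 KiB of repeated `A` bytes followed by 4 KiB of `B` bytes, the recipe has four entries but the store holds only two compressed chunks, and `reassemble` reproduces the original bytes exactly.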

Conclusion

Summary of key points

In the article titled “Optimizing Database Backups for Efficient Data Recovery”, the key points discussed can be summarized as follows:

1. Optimizing database backups is crucial for efficient data recovery. By implementing strategies such as scheduling regular backups, utilizing compression techniques, and leveraging incremental backups, organizations can minimize downtime and ensure faster recovery in case of data loss.

2. SQL Server provides several features that can help optimize database backups, including differential backups, backup compression, and options for tuning backup and restore performance.

3. Organizations should also consider implementing best practices for database backup and recovery, such as testing backup and restore processes, monitoring backup performance, and regularly reviewing and updating backup strategies to adapt to changing business needs.

By optimizing database backups, organizations can enhance data recovery capabilities, minimize downtime, and ensure the continuity of critical business operations.

Importance of continuous improvement

Continuous improvement is crucial for any organization to stay competitive in today’s fast-paced business environment. It allows businesses to identify and address weaknesses in their processes, products, and services, leading to increased efficiency and customer satisfaction. By continuously improving their database backup strategies, organizations can ensure efficient data recovery in case of any unforeseen events or disasters. This not only minimizes the risk of data loss but also reduces downtime and improves overall business continuity. Implementing best practices and regularly reviewing and updating backup processes can help organizations optimize their database backups and enhance their ability to recover data quickly and effectively.

Future trends in database backup optimization

Several developments are likely to change how database backups are optimized and how data recovery is performed. With rapidly growing data volumes and increasingly complex database systems, new approaches are needed to keep backups efficient and reliable. One trend is the adoption of cloud-based backup solutions, which offer scalability, flexibility, and often lower costs, letting organizations store and recover data without managing their own backup infrastructure. Another is the integration of artificial intelligence and machine learning into backup processes, where these technologies may help analyze data patterns, flag potential risks, and adjust backup strategies automatically. Some vendors are also exploring blockchain technology to make backups tamper-evident through an immutable, decentralized record. As organizations continue to prioritize data protection and recovery, trends like these are likely to play a growing role in ensuring the integrity and availability of critical data.